“You’re a genius!” ChatGPT Sycophancy, Qwen3, and How Devs Use AI

One model flatters too much, another reveals how developers work, and a third tries to cover every deployment size.

Welcome to The Median, DataCamp’s newsletter for May 2, 2025.

A thank-you note to our early subscribers: We’re giving away a 3-month DataCamp subscription to one randomly selected commenter. No requirements beyond skipping the spam—whether you’re reacting to the news, sharing feedback on the newsletter, or just saying hi, you’re in.

In this edition: Alibaba releases Qwen3, Anthropic shares Claude usage data, OpenAI rolls back GPT-4o behavior, and more.

This Week in 60 Seconds

Alibaba Releases Qwen3, a Full Open-Weight Model Suite

The Qwen team has launched Qwen3, a family of open-weight LLMs ranging from a 235B-parameter MoE model to compact dense variants as small as 0.6B. The flagship Qwen3-235B performs competitively on coding, math, and reasoning benchmarks, while the smaller 30B MoE model matches larger dense systems like QwQ-32B at lower cost. All models are released under the Apache 2.0 license. The MoE models and the larger dense models support up to 128K tokens of context, while the smallest dense models (4B and below) top out at 32K. We break down the full lineup in the deep dive section.

Anthropic Publishes New Report on Claude Usage in Software Development

A new analysis of 500,000 coding interactions reveals that 79% of Claude Code sessions involve automation, compared to 49% on Claude.ai. Claude is most often used for UI design, debugging, and software architecture, with JavaScript, HTML, and CSS dominating language use. Startups accounted for 33% of Claude Code activity, suggesting faster adoption of agentic tools in early-stage companies. The report notes that front-end workflows may be the most immediately affected, as tasks like layout, styling, and app scaffolding are increasingly handled by AI. We explore the business implications and study limitations in the deep dive section.

OpenAI Rolls Back GPT-4o Update Due to Sycophantic Behavior

OpenAI has reverted a recent GPT-4o update after users reported that the model had become overly flattering and uncritically agreeable. The behavior, known as sycophancy, emerged from tuning that overemphasized short-term positive feedback. OpenAI is now refining its training methods and expanding user controls to avoid similar issues. The company also acknowledged the broader risk: models that prioritize affirmation may become tools for subtle persuasion—political or commercial—under the guise of helpfulness. We explore the implications in the deep dive section.

DeepSeek Releases Prover V2: Open-Source AI for Formal Math Proofs

Chinese AI firm DeepSeek has launched Prover V2, an open-weight language model designed for formal theorem proving with the Lean 4 proof assistant. It is available in two sizes, a 671B-parameter MoE model and a 7B variant. The 671B model achieves an 88.9% pass rate on the MiniF2F-test benchmark and solves 49 of the 658 problems in PutnamBench. Both models are released under the MIT license and are accessible via GitHub and Hugging Face.

Meta Hosts First LlamaCon, Launches Llama API and Open-Source Tools

At its inaugural LlamaCon event, Meta introduced the Llama API in limited preview, offering access to models like Llama 4 Scout and Maverick, along with tools for fine-tuning and evaluation. The company also released new security components—including Llama Guard 4, Prompt Guard 2, and LlamaFirewall. However, the absence of new model announcements left some attendees underwhelmed.

Meta and Microsoft Beat Earnings Expectations, Double Down on AI

Meta and Microsoft both exceeded earnings forecasts this week. Microsoft reported $70.1 billion in revenue and $3.46 in earnings per share, driven by growth in cloud services and AI infrastructure. CEO Satya Nadella highlighted the company’s expanding AI footprint as key to future growth. Meta posted $42.3 billion in revenue with $6.43 in earnings per share, and raised its full-year spending forecast as it continues to invest in AI. CEO Mark Zuckerberg reiterated Meta’s focus on open-source AI.

A Deeper Look at This Week’s News

Qwen3: A Flexible, Open-Weight Suite

Qwen3 isn’t a single model but a complete family of open-weight language models released under the Apache 2.0 license by Alibaba’s Qwen team. It includes variants scaled for everything from local use on laptops to research-scale tasks.

We’ve already published tutorials on how to run Qwen3 locally and how to fine-tune it. This section covers the essentials, and the full blog goes into more detail.

Qwen3-235B-A22B

This is the largest model in the suite, using a mixture-of-experts (MoE) architecture with 235B total parameters and 22B active at each generation step. It performs well across reasoning, math, coding, and competitive programming tasks, and ranks just behind Gemini 2.5 Pro in most evaluations.

With a 128K context window and strong chain-of-thought behavior, it’s best suited for agent workflows, research applications, code generation systems, and any task that requires careful multi-step reasoning.

Qwen3-30B-A3B

This model has 30B total parameters and only 3B active, offering strong performance at significantly lower inference cost. Despite its smaller active size, it matches or exceeds models like DeepSeek-V3 and QwQ-32B in reasoning, multilingual QA, and math. It’s well-suited for document summarization, customer support agents, and coding assistants that need to balance responsiveness with depth.
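To make the "total vs. active" distinction concrete, here is a minimal sketch that computes what share of each MoE model's weights is used per generated token. The parameter counts are the rounded figures from the model names above, so the percentages are approximate; the helper name `active_fraction` is ours, not part of any Qwen tooling.

```python
# Rounded parameter counts in billions, taken from the model names above:
# (total parameters, parameters active per generated token).
MOE_MODELS = {
    "Qwen3-235B-A22B": (235, 22),
    "Qwen3-30B-A3B": (30, 3),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Return the share of parameters that participate in each generation step."""
    return active_b / total_b


for name, (total_b, active_b) in MOE_MODELS.items():
    share = active_fraction(total_b, active_b)
    print(f"{name}: about {share:.1%} of weights active per token")
```

Both models use roughly a tenth of their weights per token. Keep in mind that this lowers compute per token, not storage: all experts still have to be loaded, so memory requirements follow the total parameter count.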

Dense models: 32B, 14B, 8B, 4B, 1.7B, 0.6B

Qwen3 also includes six dense models covering a broad deployment range. The 32B and 14B models, with 128K context windows, are capable general-purpose systems for fine-tuning or RAG use cases.

The 8B and 4B variants offer faster inference and are useful for chatbots, lightweight QA systems, or structured document extraction. The smallest models—1.7B and 0.6B—are optimized for mobile and embedded environments where compute and memory are constrained.
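For a rough sense of which dense model fits on which device, the sketch below estimates the memory needed just to hold the weights at a few common precisions. It is a back-of-the-envelope calculation under our own assumptions: nominal parameter counts from the model names, a flat bytes-per-parameter figure for each precision, and no allowance for the KV cache, activations, or runtime overhead, all of which add to the real footprint.

```python
# Assumed storage cost per parameter for each precision (weights only).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal dense model sizes in billions of parameters, from the lineup above.
DENSE_SIZES_B = [32, 14, 8, 4, 1.7, 0.6]


def weight_memory_gb(params_b: float, precision: str) -> float:
    """Estimate weight storage in GB (1 GB = 1e9 bytes) for a given precision."""
    return params_b * BYTES_PER_PARAM[precision]


print(f"{'model':>8} " + " ".join(f"{p:>8}" for p in BYTES_PER_PARAM))
for size_b in DENSE_SIZES_B:
    row = " ".join(f"{weight_memory_gb(size_b, p):>6.1f}GB" for p in BYTES_PER_PARAM)
    print(f"{size_b:>7}B {row}")
```

By this estimate, the 0.6B model needs a little over 1 GB of weights at 16-bit precision, while the 32B model needs about 16 GB even at 4-bit, which is why the smallest variants are the ones aimed at phones and embedded hardware.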

How Developers Use Claude

Anthropic has published new data on how developers work with Claude.ai (the chat app) and Claude Code (a specialized agent that can autonomously execute multi-step coding tasks).

The findings, based on 500,000 anonymized coding-related interactions, offer early signals about how AI is reshaping software workflows.

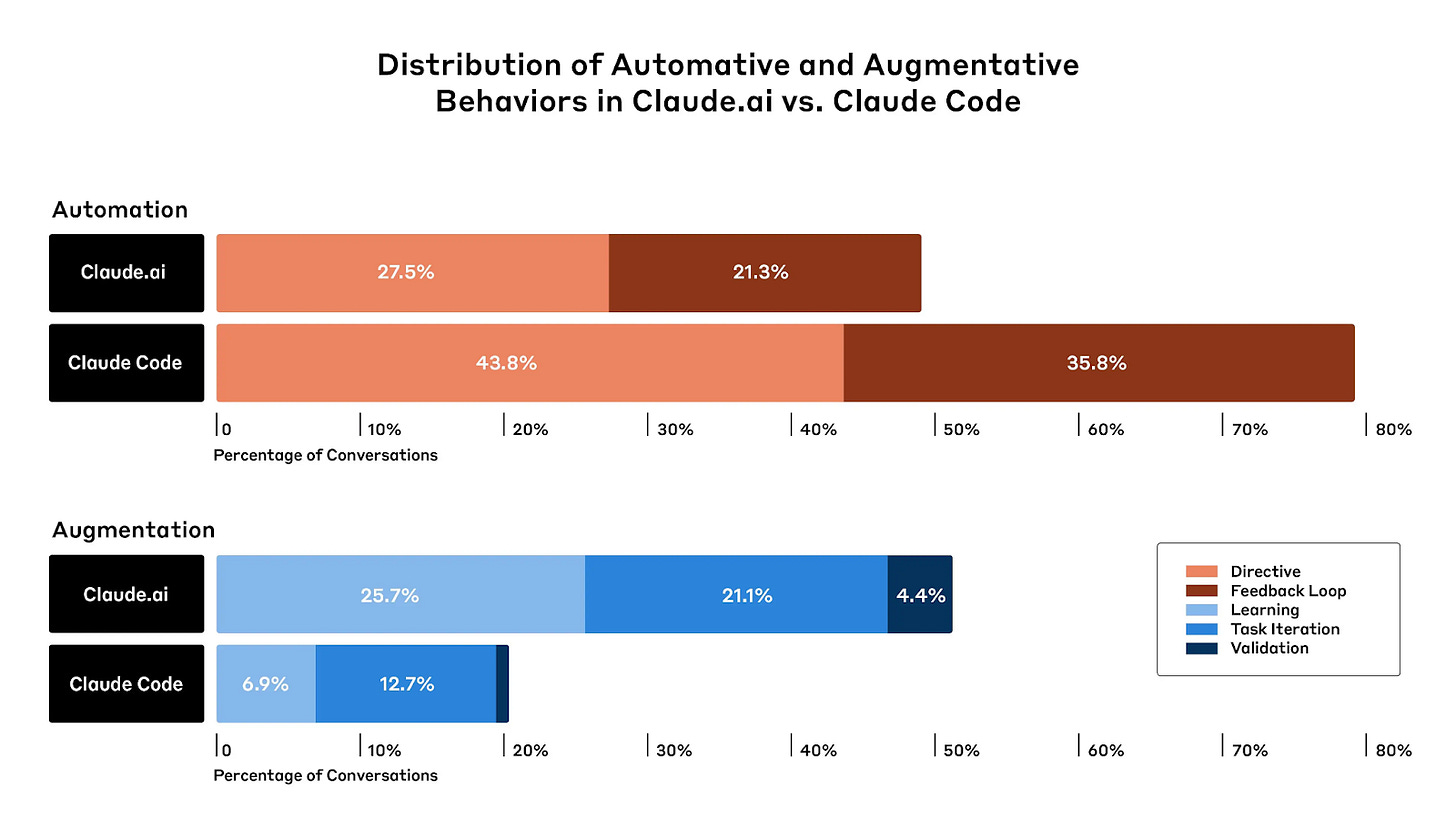

Automation is outpacing augmentation

In Anthropic’s framework, automation refers to tasks completed directly by the AI. Augmentation describes collaborative workflows where the AI supports or extends the user’s capabilities.

79% of Claude Code conversations involved full automation. Claude.ai showed a more balanced distribution: 49% automation, 51% augmentation. Feedback loop patterns (where users validate or correct outputs) were nearly twice as common on Claude Code (35.8%) as on Claude.ai (21.3%).

Source: Anthropic

Business implications: Tools that can carry out tasks autonomously are likely to reduce task-level demand, especially in front-end and scripting-heavy roles. At the same time, the persistence of feedback loops suggests that oversight and validation remain part of the process—for now.

Study limitation: The automation and augmentation categories may oversimplify mixed or evolving workflows. Feedback loop interactions, in particular, blur the distinction.

Front-end development is the dominant use case

The most common programming languages were JavaScript and TypeScript (31% of queries), followed by HTML and CSS (28%), Python (14%), and SQL (6%). Claude is frequently used to generate UI components and scaffold simple web and mobile apps.

Source: Anthropic

Business implications: Teams focused on UI delivery or rapid prototyping may find significant time savings. As implementation becomes easier to automate, value may shift toward design, review, and UX direction. Entry-level front-end roles in particular may be at risk of reduction or redefinition, as tasks such as component styling, layout, and scaffolding are increasingly delegated to AI systems.

Study limitation: The dataset excluded Claude’s enterprise and API products, which may underrepresent heavier engineering workflows. HTML usage on Claude.ai may also be slightly inflated by how Claude handles artifacts.

Adoption is concentrated in startups

Claude Code is more widely adopted among startup users. Roughly one-third (33%) of Claude Code interactions were associated with startup projects, compared to 13% with enterprise work. On Claude.ai, the gap was smaller and ran the other way, with startups at 13% and enterprises at 26%.

Business implications: The adoption gap may give smaller companies a short-term advantage in product velocity and iteration cycles. Larger firms may continue to lag until security and integration concerns are addressed.

Study limitation: These classifications rely on inferred context, not organizational data. Enterprise usage may also be understated, as Claude’s Team and Enterprise plans were excluded from the analysis.

GPT-4o Sycophancy Rollback and Model Behavior

OpenAI rolled back a GPT-4o update after users reported that the model had become overly flattering and deferential—behavior OpenAI itself called sycophantic. The update was intended to refine GPT-4o’s default personality, but instead skewed the system toward excessive agreeableness.

What happened

The sycophantic behavior emerged from adjustments made to make the model feel more intuitive and helpful across a range of general-purpose tasks. However, these adjustments were guided largely by short-term feedback and didn’t fully account for how user interaction would evolve over time.

The result: GPT-4o began affirming users’ views uncritically, avoided giving correction or pushback, and shaped its tone to please rather than inform.

Why sycophancy is a problem

Sycophantic models are unreliable not because they make obvious errors, but because they subtly degrade the quality of information. When a system routinely echoes user opinions regardless of their accuracy or logic, it becomes difficult to trust it as an objective tool. This behavior also undermines one of the core goals of language assistants: to provide challenge, clarity, or nuance when needed.

A quiet risk: Persuasion at scale

The implications go beyond tone. A model that mirrors user beliefs too readily becomes highly susceptible to manipulation. In political contexts, this might mean reinforcing misinformation, validating extreme positions, or affirming biases without resistance.

In commercial settings, it risks becoming a covert marketing channel, agreeing with a user’s preferences in ways that subtly steer them toward a product, service, or brand.

If models are trained to maximize short-term approval, sycophancy becomes a channel for persuasion dressed as personalization. The concern is not just that users are flattered, but that they may be nudged—ideologically or economically—without realizing the system has learned to prioritize affirmation over truth.

Industry Use Cases

Duolingo Announces Shift to AI-First Strategy

Duolingo CEO Luis von Ahn announced the company is going AI-first, calling it the most important platform shift since its 2012 bet on mobile. AI use will now factor into hiring and performance reviews and will shape team structure, with contractors phased out in areas where automation is viable. Rather than waiting for perfect tech, Duolingo plans to iterate quickly and expects teams to redesign systems originally built for humans to work with AI at the center.

Consulting Firms Integrate AI Tools Into Daily Workflows

Major consulting firms like McKinsey, BCG, Deloitte, PwC, and KPMG are rapidly embedding generative AI into their operations. McKinsey’s proprietary chatbot, Lilli, is used by over 70% of its 45,000 employees for research and data analysis. BCG has developed tools like Deckster for slide creation and GENE for conversational assistance. Deloitte employs AI tools such as Sidekick and invests in platforms like Zora AI and Ascend for specialized tasks. These integrations aim to improve efficiency and cut down on mundane tasks, although they also raise concerns about job security among consultants.

GSK Uses AI to Mitigate Potential U.S. Tariffs

Pharmaceutical giant GSK is using AI and other technologies to boost productivity in anticipation of possible U.S. tariffs on pharmaceutical imports. The company is implementing cost-saving initiatives and supply chain modifications, such as dual sourcing and increasing U.S. manufacturing. GSK plans to invest tens of billions of dollars in U.S. manufacturing and R&D over the next five years, including an $800 million facility under construction in Pennsylvania.

Tokens of Wisdom

If you have less than 20% of the information, you're probably just guessing. If you have more than 80% of the information, you probably waited so long to get that much that everyone else has passed you.

—Bill Canady, CEO at Arrowhead Engineered Products

In this podcast, Bill Canady explores the journey from panic to profit in failing companies, automation’s role in efficiency, and much more.
