An OpenAI Social App? GPT-4.1, o3, o4-mini, and Gemini 2.5 Flash

OpenAI explores social features and ships new models, while Google previews Gemini 2.5 Flash and brings Veo 2 to Gemini.

Welcome to The Median, DataCamp’s newsletter for April 18, 2025.

A thank-you note to our early subscribers: We’re giving away a 3-month DataCamp subscription to one randomly selected commenter. No requirements beyond skipping the spam—whether you’re reacting to the news, sharing feedback on the newsletter, or just saying hi, you’re in.

In this edition: new OpenAI models, a social app prototype, and Google DeepMind’s latest moves in video, reasoning, and prompt engineering.

This Week in 60 Seconds

OpenAI Releases GPT-4.1, o3, o4-mini, and Codex

GPT-4.1 is aimed at developers and is available only through the API, in standard, mini, and nano variants. It offers a 1-million-token context window, stronger code generation, and more controllable outputs, and OpenAI plans to phase out GPT-4.5 Preview in the API by July. o4-mini brings fast, multimodal reasoning with full tool use at a fraction of o3’s cost, making it a compelling option in ChatGPT. o3, meanwhile, is the new flagship for high-compute reasoning tasks. OpenAI also released Codex CLI, an open-source command-line agent that interprets natural-language commands and carries them out across more than a dozen programming languages.

OpenAI Is Reportedly Building a Social Media Platform

OpenAI is reportedly developing a new social media platform centered around AI-generated content, aiming to rival Elon Musk’s X (formerly Twitter) and Meta’s offerings. An internal prototype features a social feed focused on ChatGPT’s image generation capabilities, though it’s unclear whether this will be a standalone app or integrated into ChatGPT. This initiative could provide OpenAI with a continuous stream of user-generated data to enhance its models, while also solidifying its position as a direct competitor to Big Tech.

Gemini 2.5 Flash Enters Preview

Google released Gemini 2.5 Flash in preview: a faster, leaner reasoning model designed for developers building real-time apps. It introduces “thinking budgets,” which let developers cap how many tokens the model spends reasoning before it responds, trading off cost, speed, and quality. Developers can set a token limit, turn thinking off entirely, or let the model decide how deeply to reason based on task complexity. Despite its lightweight design, Flash ranks just behind Gemini 2.5 Pro on complex benchmarks and improves on Gemini 2.0 Flash without raising latency.
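To make the three thinking-budget options concrete, here is a minimal sketch that only builds a JSON request body for the Gemini API's `generateContent` endpoint; it sends nothing. It assumes the `generationConfig.thinkingConfig.thinkingBudget` field and a maximum Flash budget of 24,576 tokens, as we understand the preview documentation at the time of writing (in the `google-genai` Python SDK, the same setting is exposed as `types.ThinkingConfig(thinking_budget=...)`). The `build_request` helper is hypothetical, so check Google's current docs before relying on any of these names.

```python
import json
from typing import Optional

# Assumed upper bound for 2.5 Flash thinking budgets at preview launch;
# verify against Google's current documentation.
MAX_THINKING_BUDGET = 24576


def build_request(prompt: str, thinking_budget: Optional[int] = None) -> dict:
    """Build a generateContent-style JSON body (hypothetical helper; sends nothing).

    thinking_budget=None -> omit thinkingConfig and let the model decide
    thinking_budget=0    -> turn thinking off
    thinking_budget=N    -> cap reasoning at about N tokens
    """
    body: dict = {"contents": [{"parts": [{"text": prompt}]}]}
    if thinking_budget is not None:
        if not 0 <= thinking_budget <= MAX_THINKING_BUDGET:
            raise ValueError(f"thinking_budget must be in [0, {MAX_THINKING_BUDGET}]")
        body["generationConfig"] = {"thinkingConfig": {"thinkingBudget": thinking_budget}}
    return body


if __name__ == "__main__":
    for budget in (None, 0, 1024):
        print(json.dumps(build_request("Classify this support ticket.", budget)))
```

Leaving the field out entirely, rather than sending a sentinel value, keeps the "let the model decide" case identical to an ordinary request.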

Veo 2 Now Available in Gemini

Google has made Veo 2 available in Gemini Advanced, allowing users to generate eight-second, 720p videos from text prompts with improved realism and fluid motion. The model better understands physics and human movement, enabling cinematic scenes across a variety of styles and subjects. The feature isn’t available to users signed in with a work or school Google Account, and because access is rolling out gradually, some eligible users may not see it yet.

Google Publishes Prompt Guide

Google has released a 69-page prompt engineering guide by Lee Boonstra, offering practical strategies for writing effective prompts for large language models like Gemini. The guide covers techniques such as zero-shot, few-shot, and chain-of-thought prompting, as well as advanced methods like ReAct and Tree of Thoughts. It also provides best practices for developers, including prompt structuring, output formatting, and sampling configuration. You can download it from this link.
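Sampling configuration usually comes down to three settings: temperature, top-K, and top-P. As a rough illustration of what each one does, here is a minimal sketch applied to a toy three-token distribution. The function names and the order of operations (temperature, then top-K, then top-P measured against the full softmax) are our own choices; providers differ in how they combine these settings, so treat this as an approximation rather than any particular API's behavior.

```python
import math
import random
from typing import Dict, Optional


def filter_distribution(
    logits: Dict[str, float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    top_p: Optional[float] = None,
) -> Dict[str, float]:
    """Apply temperature, then top-K, then top-P; return renormalized probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (use argmax for greedy decoding)")
    # Temperature: dividing logits by T < 1 sharpens the distribution, T > 1 flattens it.
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    # Softmax, subtracting the max logit for numerical stability.
    peak = max(scaled.values())
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(
        ((tok, w / total) for tok, w in weights.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    # Top-K: keep only the K most likely tokens (always at least one).
    if top_k is not None:
        ranked = ranked[: max(top_k, 1)]
    # Top-P (nucleus): keep the smallest high-probability prefix whose
    # cumulative probability (under the softmax above) reaches top_p.
    if top_p is not None:
        kept, mass = [], 0.0
        for tok, p in ranked:
            kept.append((tok, p))
            mass += p
            if mass >= top_p:
                break
        ranked = kept
    norm = sum(p for _, p in ranked)
    return {tok: p / norm for tok, p in ranked}


def sample(logits: Dict[str, float], rng: Optional[random.Random] = None, **settings) -> str:
    """Draw one token from the filtered distribution."""
    rng = rng if rng is not None else random.Random()
    dist = filter_distribution(logits, **settings)
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
```

With logits `{"a": 2.0, "b": 1.0, "c": 0.0}`, `top_k=1` always yields `"a"`, while `top_p=0.9` keeps `"a"` and `"b"` and drops the unlikely `"c"`.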

A Deeper Look at This Week’s News

Two Advanced Prompting Techniques

This week, Google released a prompt engineering guide (written by Lee Boonstra) packed with hands-on techniques that go beyond the usual zero- or few-shot setups. Two standout methods, Tree of Thoughts (ToT) and ReAct, offer a glimpse of where prompting is headed as we move toward AI agents and planning systems.

Tree of Thoughts (ToT)

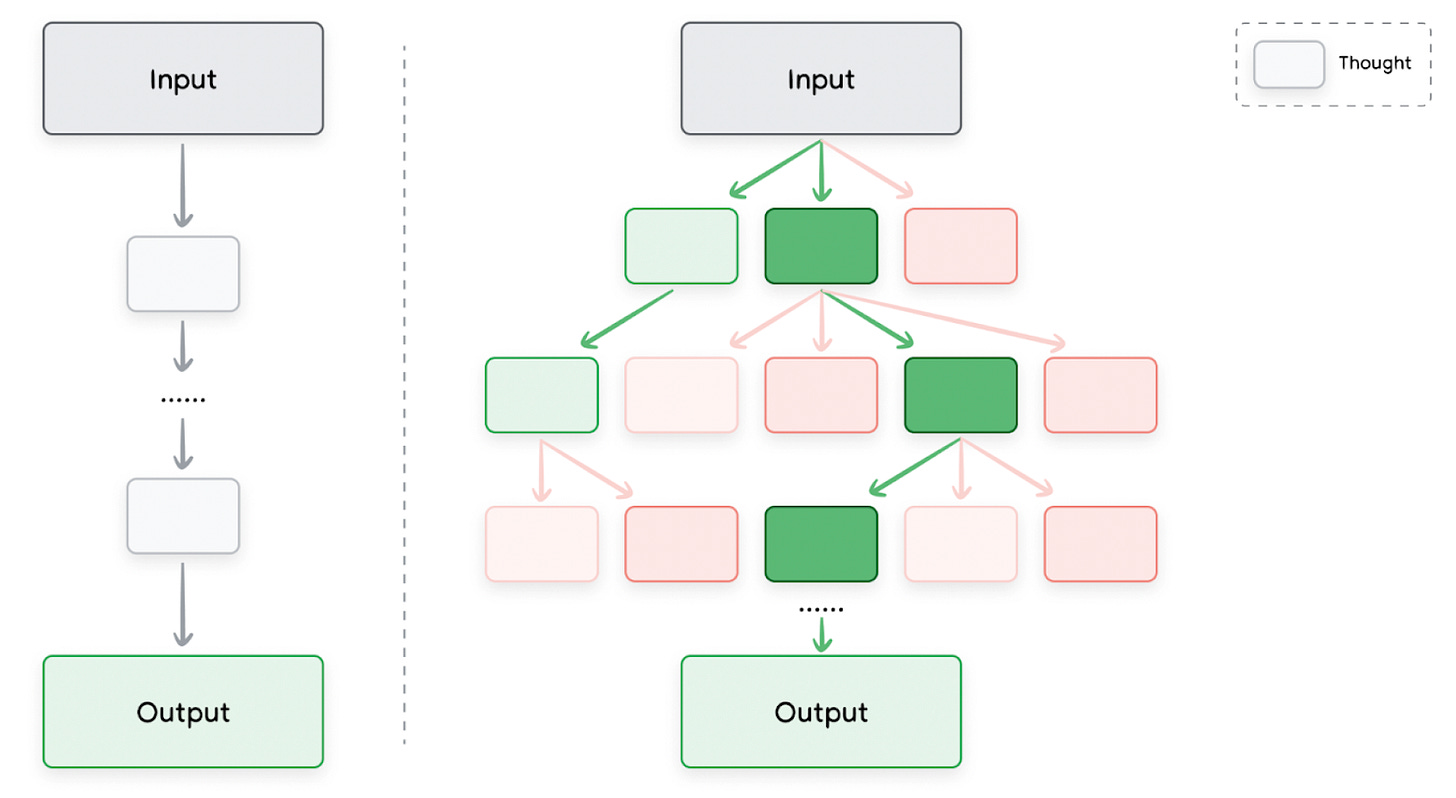

Tree of Thoughts (ToT) builds on Chain of Thought (CoT) prompting by turning linear reasoning into a branching exploration. Instead of guiding the model down one reasoning path, ToT keeps a tree of multiple ideas or approaches and explores them in parallel. This makes it better suited for complex decision-making, where multiple lines of reasoning might need to be compared or pruned along the way.

Source: Prompt Engineering (Lee Boonstra)
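The branch-and-prune idea behind ToT can be sketched as a breadth-first search that keeps only the best few partial solutions at each level. In this minimal sketch, `propose` and `score` are hypothetical stand-ins for the two LLM calls ToT relies on (one to suggest next steps, one to rate how promising a partial solution looks); here they are simple arithmetic on a toy puzzle (reach 24 from 1 using +3, ×2, or ×3) so the search runs offline.

```python
from typing import Callable, Dict, List, Tuple

# A "thought" here is a partial solution: (current value, moves taken so far).
State = Tuple[int, Tuple[str, ...]]

START, TARGET = 1, 24
# Hypothetical toy moves standing in for the reasoning steps an LLM would propose.
MOVES: Dict[str, Callable[[int], int]] = {
    "+3": lambda x: x + 3,
    "*2": lambda x: x * 2,
    "*3": lambda x: x * 3,
}


def propose(state: State) -> List[State]:
    """Stand-in for prompting an LLM: 'suggest possible next steps from here'."""
    value, path = state
    return [(move(value), path + (name,)) for name, move in MOVES.items()]


def score(state: State) -> float:
    """Stand-in for prompting an LLM: 'how promising is this partial solution?'"""
    value, _ = state
    return -abs(TARGET - value)


def tree_of_thoughts(max_depth: int = 5, beam_width: int = 2) -> Tuple[str, ...]:
    """Breadth-first search over thoughts, keeping the best `beam_width` per level."""
    frontier: List[State] = [(START, ())]
    for _ in range(max_depth):
        # Branch: expand every surviving thought into several candidates.
        candidates = [child for state in frontier for child in propose(state)]
        for value, path in candidates:
            if value == TARGET:
                return path
        # Prune: keep only the most promising branches for the next level.
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return ()  # no solution found within max_depth


if __name__ == "__main__":
    print(tree_of_thoughts())  # prints ('+3', '*3', '*2')
```

Raising `beam_width` explores more branches per level at the cost of more model calls, which is the same trade-off ToT makes with real prompts.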

ReAct

ReAct (short for Reason + Act) combines step-by-step thinking (the “reason” part) with external actions (the “act” part), such as making API calls or running searches. Instead of solving a problem in one go, the model reasons about what it needs, performs an action (for example, querying Google), observes the result, and adjusts its thinking. Because ReAct agents can refine their answers iteratively, they are especially useful for tasks that involve external knowledge or dynamic environments.
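The reason, act, observe cycle can be made concrete with a small loop. This is a minimal sketch under stated assumptions: `scripted_model` is a hypothetical stand-in for an LLM, the `search` tool is backed by a hard-coded dictionary rather than Google, and the `Thought:` / `Action: tool[input]` / `Observation:` text format is one common convention, not a fixed standard.

```python
import re
from typing import Callable, Dict

# Hypothetical tools. A real agent would call a search API instead of this dict.
FAKE_SEARCH_INDEX = {"capital of france": "Paris"}
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda query: FAKE_SEARCH_INDEX.get(query.lower(), "no results"),
    "count_letters": lambda text: str(sum(ch.isalpha() for ch in text)),
}
# Parses lines like "Action: search[capital of France]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def scripted_model(transcript: str) -> str:
    """Hypothetical stand-in for an LLM: picks the next step from what it has observed."""
    if "Observation: Paris" not in transcript:
        return "Thought: I need to find the capital first.\nAction: search[capital of France]"
    if "Observation: 5" not in transcript:
        return "Thought: Now I can count the letters.\nAction: count_letters[Paris]"
    return "Thought: I have everything I need.\nFinal Answer: Paris, which has 5 letters"


def react(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate reasoning (model output) and acting (tool calls) until an answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)  # Reason: the model writes a thought and maybe an action.
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: no valid action found\n"
            continue
        tool, arg = match.group(1), match.group(2)
        # Act, then observe: the tool result is fed back into the next prompt.
        result = TOOLS[tool](arg) if tool in TOOLS else f"unknown tool '{tool}'"
        transcript += f"Observation: {result}\n"
    return "no answer within the step limit"


if __name__ == "__main__":
    print(react("What is the capital of France, and how many letters are in its name?", scripted_model))
```

The `max_steps` cap matters in practice: without it, a model that never emits a final answer would loop forever.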

You can explore more prompting techniques in the full guide here.

Why Is OpenAI Building a Social Platform?

Earlier this week, reports surfaced that OpenAI is quietly working on a social media platform powered by generative AI—a move that would pit it against X (formerly Twitter), Meta’s Instagram and Threads, and even Reddit, depending on the form this takes.

We think there are two important questions here:

What might the platform look like?

What does OpenAI actually want from building it?

What Might the Platform Look Like?

Right now, most AI interactions happen in private: users enter a prompt, get a result, and move on. A social platform shifts that into something more persistent and collaborative. Instead of just using AI for productivity or experimentation, users would start sharing outputs, seeing what others are generating, and building on them.

There are still open questions about format and integration. Would it be a separate app or part of ChatGPT? Would it include code, text, or just visuals? Would users be able to post original, human-authored content like on X or Instagram, or would the feed be limited to outputs generated within ChatGPT? That said, a few things seem likely based on current patterns:

Multimodal-first: Expect images, maybe video or audio next, especially as OpenAI continues building visual capabilities into its models.

Prompt transparency: Shared generations might include prompt cards, letting users copy, edit, or fork others’ creations directly.

Searchable and remixable: Like prompt collections or image galleries, the platform could focus on learning by example—showcasing techniques and outputs users can learn from and adapt.
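Prompt transparency and remixing imply a simple data model in which every shared generation remembers what it was forked from, so credit flows back to the original creator. Nothing about OpenAI's actual design is public; the `PromptCard` class and `lineage` helper below are purely hypothetical, a sketch of how fork attribution could work.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List, Optional

_next_id = count(1)  # simple in-memory ID generator for the sketch


@dataclass(frozen=True)
class PromptCard:
    """Hypothetical shared generation: the prompt, its author, and its parent card."""
    prompt: str
    author: str
    parent_id: Optional[int] = None
    card_id: int = field(default_factory=lambda: next(_next_id))

    def fork(self, author: str, edited_prompt: str) -> "PromptCard":
        # A fork is a new card that records its parent, preserving attribution.
        return PromptCard(prompt=edited_prompt, author=author, parent_id=self.card_id)


def lineage(card: PromptCard, index: Dict[int, PromptCard]) -> List[str]:
    """Walk parent links back to the original card; return authors, oldest first."""
    chain: List[str] = []
    current: Optional[PromptCard] = card
    while current is not None:
        chain.append(current.author)
        current = index.get(current.parent_id) if current.parent_id is not None else None
    return list(reversed(chain))


if __name__ == "__main__":
    original = PromptCard("a watercolor fox in the snow", "ana")
    remix = original.fork("ben", "a watercolor fox in the snow, at dusk")
    index = {c.card_id: c for c in (original, remix)}
    print(lineage(remix, index))  # prints ['ana', 'ben']
```

Making cards immutable (`frozen=True`) means a remix can never silently rewrite the card it came from.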

What Does OpenAI Actually Want From This?

OpenAI’s move into social media appears to be driven by a desire to gather real-world data on how users interact with AI-generated content. By observing which prompts are popular, how users engage with outputs, and what content is shared or remixed, OpenAI can gain valuable insights to improve its models.

Additionally, the platform could serve as a source of fresh, user-generated data, which is valuable for training and refining AI models. That data could also help ChatGPT answer questions about current events, trends, and emerging topics.

There’s also a strategic aspect: as competitors like Meta and xAI integrate AI into their platforms, OpenAI may seek to establish its own space where AI is central to user interaction.

OpenAI Releases GPT-4.1, o3, o4-mini, and Codex

This week, OpenAI introduced four major updates across its model and tooling lineup: GPT-4.1, o3, o4-mini, and the return of Codex in a command-line interface. Each release serves a different user segment—from developers needing tight control over outputs to ChatGPT power users and local-first coders. Here’s what each one brings.

GPT-4.1

GPT-4.1 is OpenAI’s newest developer-focused model and is available exclusively through the API, in standard, mini, and nano tiers. It supports a context window of up to 1 million tokens, making it a good fit for document-heavy workloads, long conversations, or multimodal tasks with large inputs. Improvements include better instruction following, stronger code output, and more reliable formatting. OpenAI plans to retire GPT-4.5 Preview from the API by July, signaling that 4.1 is now its flagship model for production applications.

o3

Unlike API-only GPT-4.1, o3 is available in ChatGPT (as well as the API) and is OpenAI’s most capable reasoning model. It excels on challenging benchmarks like ARC-AGI and FrontierMath. o3 is ideal for high-compute use cases where reliability across reasoning steps matters: think math, logic-heavy workflows, or multimodal problem-solving. It can also use tools such as web search, file analysis, and code execution. However, it comes with higher latency and cost than o4-mini.

o4-mini

o4-mini, also available through the ChatGPT app, offers full tool use, multimodal input, and fast response times at a fraction of o3’s price. While it doesn’t quite match o3 on raw reasoning benchmarks, it handles most real-world tasks well, especially ones involving visual input, chat-style coding, or document Q&A. For users who want speed, affordability, and strong baseline performance, o4-mini is likely to become the go-to default.

Codex

OpenAI also brought back the Codex name with Codex CLI, an open-source command-line tool. It doesn’t just translate natural language into code: it also accepts images and screenshots as input and can run local workflows with a degree of autonomy. Developers can use it to write scripts, turn sketches into code, or work with files, with commands executing on their own machine (the underlying models still run on OpenAI’s servers).

Industry Use Cases

Perplexity to Power AI on Motorola and Possibly Samsung Devices

Perplexity is expanding from browser-based Q&A into hardware: the company has struck a deal with Motorola to preload its assistant on new Razr foldables as an alternative to Google Gemini, complete with a custom interface. The move marks Perplexity’s first direct integration into consumer devices. Samsung is also in early talks to bring Perplexity to Galaxy phones, either as a preloaded app or default assistant option—potentially challenging Google’s dominance on Android.

Goldman Sachs Expands Internal AI Platform

Goldman Sachs has rolled out its in-house AI assistant to 10,000 employees, with plans to extend access across the firm by year-end. Built on models from OpenAI and Google, the assistant is wrapped in internal security layers to handle sensitive data. It’s being used for everything from summarizing research and translating documents to drafting code and preparing presentations. While senior staff use it for brainstorming and communication, junior analysts rely on it for writing support and data analysis. The bank reports clear productivity gains—especially in multilingual workflows.

LinkedIn COO Shares Personal AI Use Cases

Dan Shapero, Chief Operating Officer of LinkedIn, has described how he uses AI in his daily workflow. He relies on Copilot in Microsoft Teams to summarize meetings he can’t attend, ChatGPT to get up to speed on unfamiliar topics, and LinkedIn’s Account IQ tool to prepare for meetings by compiling relevant company data. He also uses AI chatbots to refine his writing and presentation skills. While he acknowledges AI’s productivity benefits, he emphasizes that human judgment remains essential in areas like leadership and candidate recruitment.

Tokens of Wisdom

LLMs are not agentic—at any moment in time, an LLM doesn’t know that it’s in the process of solving a problem. It doesn’t make it agentic just because you proclaim it is.

—Andriy Burkov, Author of The Hundred-Page Language Models Book

Andriy Burkov discusses common misconceptions about artificial intelligence in the latest episode of the DataFramed podcast.
