Scaling Models or Scaling People? Llama 4, A2A, and the State of AI in 2025

Meta launches Llama 4, OpenAI resets its roadmap, Google introduces agent protocol, and new reports chart AI adoption.

Welcome to The Median, DataCamp’s newsletter for April 11, 2025.

A thank-you note to our early subscribers: We’re giving away a 3-month DataCamp subscription to one randomly selected commenter. No requirements beyond skipping the spam—whether you’re reacting to the news, sharing feedback on the newsletter, or just saying hi, you’re in.

In this edition: Meta releases Llama 4, OpenAI reshuffles releases, Google connects agents, and two new reports offer a snapshot of AI in 2025.

This Week in 60 Seconds

Meta Releases Llama 4, Criticism Follows

Meta has released the Llama 4 series, introducing Scout and Maverick—two models employing a mixture-of-experts architecture. Scout offers a 10M context window, while Maverick is designed to compete with models like GPT-4o and Gemini 2.0 Flash in tasks such as chat, coding, and reasoning. Additionally, Meta previewed Llama 4 Behemoth, a model still in training, boasting 288 billion active parameters out of nearly 2 trillion total. However, the launch has faced criticism over benchmark discrepancies.

OpenAI’s o3 and o4-mini Coming Soon

OpenAI CEO Sam Altman announced a change in the company’s release schedule. The o3 and o4-mini models are now set to launch in the coming weeks, with GPT-5 expected in a few months. Altman cited integration challenges and the need to ensure sufficient capacity for anticipated high demand as reasons for the adjustment.

Google Releases New AI Agent Tools

At Cloud Next 2025, Google introduced two new tools for developers working on autonomous systems: the Agent Development Kit (ADK), an open-source framework for building controllable AI agents, and Agent2Agent (A2A), a protocol designed to standardize communication between agents built on different platforms.

Stanford Releases 2025 AI Index Report

Stanford’s Human-Centered AI Institute has published the 2025 edition of its annual AI Index, a comprehensive report tracking global trends in artificial intelligence. The report compiles data across research, industry, public perception, ethics, and policy to provide a snapshot of how AI is evolving worldwide. This year’s edition includes metrics on model performance, investment flows, regulatory efforts, AI safety incidents, and geopolitical dynamics—making it one of the most cited and data-rich resources for understanding the state of AI today. You can read the full report here.

DataCamp Releases 2025 Data & AI Literacy Report

DataCamp has published its 2025 State of Data & AI Literacy Report, offering insights from over 500 business leaders on how organizations are addressing skill gaps in data and artificial intelligence. The report covers trends in hiring, upskilling, and perceived readiness, and highlights growing demand for AI literacy across industries. It also includes recommendations for building more responsible and data-savvy workforces. You can read the full report here.


A Deeper Look at This Week’s News

Meta Releases Llama 4

Meta has released the Llama 4 suite of open-weight models, introducing two variants, Scout and Maverick, alongside a third still in training, Llama 4 Behemoth. Below, we brief you on the essentials. You can also expand your learning with the tutorials we’ve put together this week.

The Llama 4 Suite

Meta’s Llama 4 suite includes three models built on a mixture-of-experts architecture: Scout, Maverick, and the still-unreleased Behemoth.

Scout is the lightest of the three, designed for efficiency and long-context tasks. It has 17 billion active parameters (109 billion total) and supports a 10-million-token context window, the largest of any open-weight model to date. Trained on text, image, and video, Scout performs well on coding and visual reasoning benchmarks and, according to Meta, fits on a single H100 GPU with Int4 quantization.

Maverick is the generalist in the lineup, aimed at broad use cases like chat, coding, and multimodal reasoning. It also activates 17 billion parameters, but draws them from a larger pool of 128 experts totaling 400 billion parameters. Meta refined Maverick through curriculum sampling, prompt filtering, and co-distillation from Behemoth to improve quality without increasing cost.

Behemoth, meanwhile, is still in training. It activates 288 billion parameters from a total of nearly 2 trillion, requiring new infrastructure and a custom post-training process. Though not released, it plays a central role in training the smaller models and may eventually support community distillation work.
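Why can a model with 109 or 400 billion parameters run with only 17 billion active? In a mixture-of-experts layer, a small router scores the experts for each token and only the top few actually run. By the numbers above, Scout uses roughly 16% of its parameters per token (17B of 109B) and Maverick roughly 4% (17B of 400B). The sketch below is a generic, toy top-k router in plain Python, not Meta's implementation: the 16 scaling "experts", the dot-product router, and `top_k=1` are all assumptions chosen for illustration.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(token, experts, gate_weights, top_k=1):
    """Route one token through a toy mixture-of-experts layer.

    token: list of floats; experts: list of callables (vector -> vector);
    gate_weights: one router weight vector per expert.
    Returns (output_vector, indices_of_experts_that_ran).
    """
    # Router: score every expert with a dot product (cheap next to running an expert).
    scores = [sum(t * w for t, w in zip(token, gw)) for gw in gate_weights]
    # Keep only the top_k experts; every other expert stays idle for this token.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Mixing weights: softmax over the chosen experts' scores only.
    weights = softmax([scores[i] for i in chosen])
    output = [0.0] * len(token)
    for w, i in zip(weights, chosen):
        y = experts[i](token)
        output = [o + w * v for o, v in zip(output, y)]
    return output, chosen

# Hypothetical setup: 16 tiny "experts" that just scale their input.
random.seed(0)
dim, n_experts = 4, 16
experts = [(lambda x, s=i + 1: [s * v for v in x]) for i in range(n_experts)]
gate_weights = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]

out, used = moe_layer([0.5, -1.0, 0.25, 2.0], experts, gate_weights, top_k=1)
print(f"{len(used)} of {n_experts} experts evaluated")  # 1 of 16 experts evaluated
```

Total parameter count grows with the number of experts, while per-token compute grows only with `top_k`, which is the trade-off the "active vs. total" figures describe.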

Criticism

The Llama 4 release was quickly overshadowed by controversy around Meta’s benchmark practices. Shortly after launch, researchers discovered that the version of Llama 4 Maverick submitted to LMArena—where it briefly ranked second overall—was not the same as the publicly released model. Meta had submitted a version of Maverick “optimized for conversationality.”

LMArena responded by updating its leaderboard policies:

Meta’s interpretation of our policy did not match what we expect from model providers. Meta should have made it clearer that “Llama-4-Maverick-03-26-Experimental” was a customized model to optimize for human preference. As a result of that we are updating our leaderboard policies to reinforce our commitment to fair, reproducible evaluations so this confusion doesn’t occur in the future.

—Source: X

Following further user reports of inconsistent quality across platforms and other technical problems, Ahmad Al-Dahle, Meta’s VP of Generative AI, explained on X that the models were released “as soon as they were ready” and that it would take several days for public deployments to stabilize. Meta is “working through bug fixes and onboarding partners,” he said, and he rejected the claim that the discrepancies stem from training on test sets as “simply not true.”

AI Performance vs. AI Adoption in 2025

The AI arms race has long been framed as a battle for technological supremacy. Who will build the biggest models? Who will crack the hardest coding problems? Who will unlock the secrets to true artificial general intelligence (AGI)?

While these are critical questions, an alternative perspective is also worth considering: the true victor in the AI age will be the society that best integrates this transformative technology into the fabric of its daily life, from boardrooms to classrooms to doctor's offices.

Let’s use this angle to explore Stanford’s AI Index Report 2025 and DataCamp’s report on The State of Data & AI Literacy 2025. These are both vast reports, and we encourage you to read them in full.

The U.S. Leads, China Closes In

The United States remains the undisputed leader in producing cutting-edge AI models. In 2024, U.S.-based institutions produced 40 notable AI models, far outpacing China's 15 and Europe's mere three. U.S. private AI investment also reached $109.1 billion in 2024, nearly 12 times China's $9.3 billion and 24 times the U.K.'s $4.5 billion.
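The investment multiples can be checked directly against the reported figures. A quick Python check, using only the 2024 numbers quoted in this section (billions of U.S. dollars):

```python
# 2024 private AI investment, billions of USD, as quoted in this section.
investment = {"United States": 109.1, "China": 9.3, "United Kingdom": 4.5}

us = investment["United States"]
# Ratio of U.S. investment to each other country's investment.
ratios = {country: us / amount for country, amount in investment.items() if country != "United States"}
for country, ratio in ratios.items():
    print(f"U.S. vs {country}: {ratio:.1f}x")
# U.S. vs China: 11.7x
# U.S. vs United Kingdom: 24.2x
```

Both "nearly 12 times" and "24 times" are fair roundings of these ratios.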

While the United States holds the edge in raw technological power, China is rapidly closing the performance gap, especially through strong open-source models like DeepSeek-R1.

Who Believes in AI?

Public sentiment toward AI is a major factor driving adoption. It’s tilting toward optimism, but not everywhere and not equally. In China, Indonesia, and Thailand, sweeping majorities (83%, 80%, and 77%, respectively) view AI products and services as more beneficial than harmful. By contrast, Western countries remain distinctly more wary. In Canada, the United States, and the Netherlands, only around four in ten respondents express such optimism.

Still, the mood is not fixed. Since 2022, perceptions have softened in several historically skeptical countries. Optimism has risen by ten percentage points in both Germany and France and by eight points in Canada and Britain. In the United States, which produces the most notable AI models, optimism has risen by only four points.

The picture changes in the enterprise sector, where sentiment toward AI is markedly more positive, including in the U.S. and the U.K. According to DataCamp’s report, 82% of leaders report that AI is used at least once a week.

Still, AI adoption faces obstacles such as limited budgets, inadequate training resources, and employee resistance.

Why Is Google’s Agent2Agent (A2A) So Important?

At Google Cloud Next 2025, Google introduced the Agent2Agent (A2A) protocol, an open standard designed to facilitate communication between AI agents, regardless of their underlying frameworks or vendors. This initiative addresses the growing need for interoperability in increasingly complex, multi-agent systems.

How Agent2Agent Works

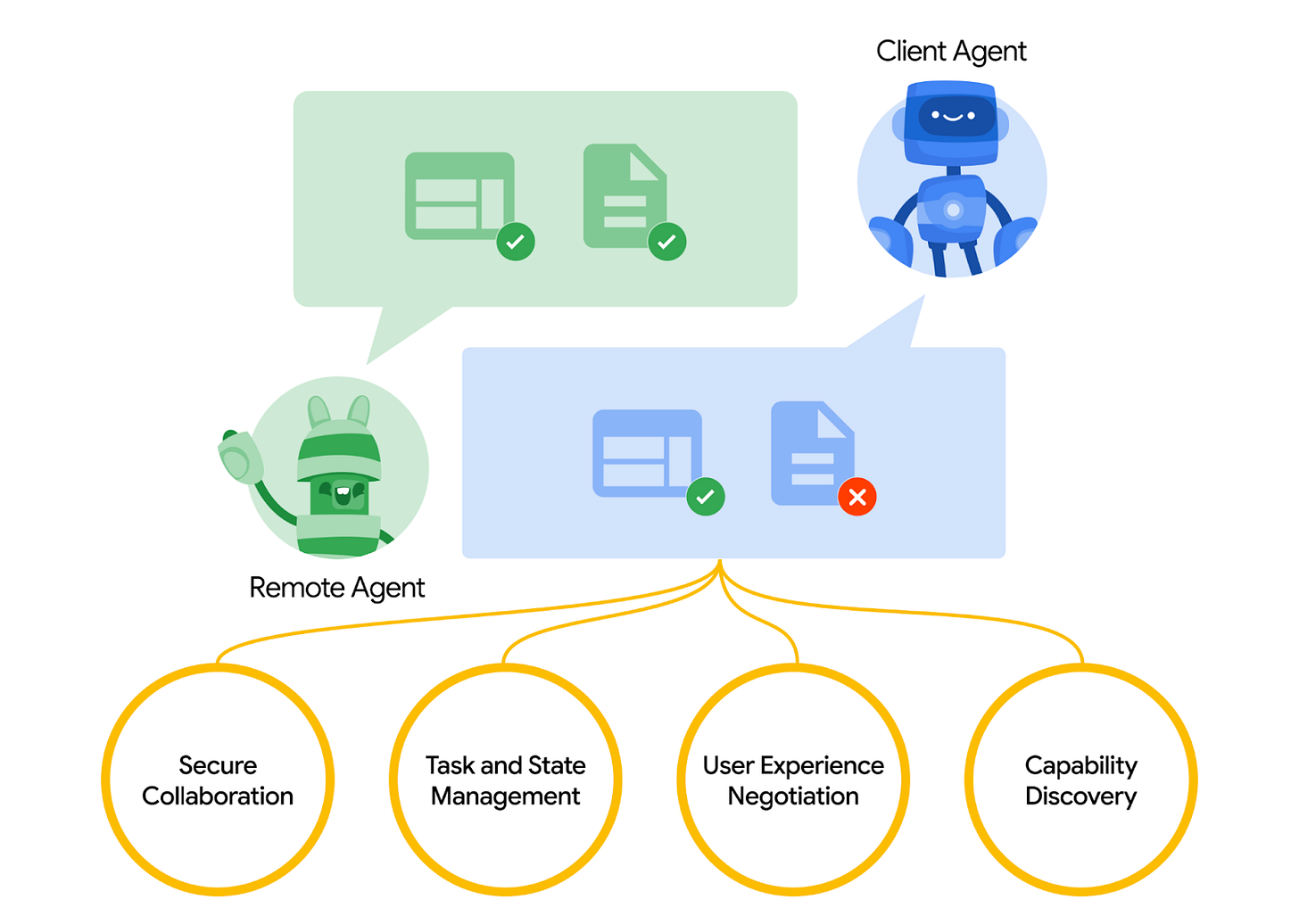

To understand how A2A works, let’s take a look at the diagram below. The Client Agent (in blue) initiates a request—for example, to process documents or complete a workflow—but may lack the capability to do so alone. The Remote Agent (in green) steps in to help, offering its own set of verified abilities. Through A2A, the two agents negotiate which tasks can be handled, coordinate their respective roles, and resolve which features are unsupported.

Source: Google

A2A supports this exchange through four key mechanisms:

Secure collaboration: Agents authenticate each other and ensure that any exchanged data is handled securely.

Task and state management: A shared structure defines the lifecycle of a task—creation, handoff, progress updates, and completion.

User experience negotiation: Agents agree on how they should present outputs or interface with humans—be it through text, forms, or voice.

Capability discovery: Each agent shares a machine-readable “agent card” detailing what it can do, allowing for automatic matchmaking across systems.
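Two of these mechanisms, capability discovery and task state management, can be sketched in a few lines of Python. This is a simplified illustration, not Google's reference implementation: the `AgentCard` fields, the `discover` helper, the transition table, and the agent names and URLs are hypothetical, and secure collaboration (authentication) is omitted entirely. The task state names mirror those listed in the A2A spec at launch.

```python
from dataclasses import dataclass, field

# Simplified, hypothetical agent card. The published A2A spec defines a richer
# JSON document (version, capabilities, authentication, input/output modes,
# structured skills, ...); only a few illustrative fields are kept here.
@dataclass
class AgentCard:
    name: str
    url: str
    skills: list[str] = field(default_factory=list)
    output_modes: list[str] = field(default_factory=lambda: ["text"])

def discover(cards, needed_skill, preferred_mode="text"):
    """Capability discovery plus a crude UX negotiation: keep agents that
    advertise the skill AND can return output in the client's preferred mode."""
    return [c for c in cards if needed_skill in c.skills and preferred_mode in c.output_modes]

# Task lifecycle as a small state machine. State names mirror the A2A spec;
# the transition table itself is an assumption for this sketch.
TRANSITIONS = {
    "submitted": {"working", "canceled"},
    "working": {"input-required", "completed", "failed", "canceled"},
    "input-required": {"working", "canceled"},
    # completed, failed, canceled are terminal: no outgoing transitions.
}

class Task:
    def __init__(self, task_id):
        self.task_id = task_id
        self.state = "submitted"
        self.history = ["submitted"]

    def update(self, new_state):
        """Apply a progress update, rejecting transitions the table does not allow."""
        if new_state not in TRANSITIONS.get(self.state, set()):
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.state = new_state
        self.history.append(new_state)

# Usage with made-up agents and URLs.
cards = [
    AgentCard("doc-parser", "https://agents.example.com/parser", ["parse_pdf"], ["text", "form"]),
    AgentCard("translator", "https://agents.example.com/translate", ["translate"]),
]
matches = discover(cards, "parse_pdf", preferred_mode="form")
print([c.name for c in matches])  # ['doc-parser']

task = Task("task-1")
for state in ("working", "input-required", "working", "completed"):
    task.update(state)
print(task.history)  # ['submitted', 'working', 'input-required', 'working', 'completed']
```

Modeling the lifecycle as an explicit state machine lets either agent reject out-of-order updates, such as progress reported on a task that is already completed.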

Complementing the Model Context Protocol (MCP)

While A2A focuses on agent-to-agent communication, it complements the Model Context Protocol (MCP) developed by Anthropic. MCP standardizes how AI models access external data sources and tools, facilitating context-aware operations. In essence, MCP connects models to data, whereas A2A connects agents to each other. Together, MCP and A2A could form the backbone of industry standards in the years ahead.
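Both protocols exchange JSON-RPC 2.0 messages, so the "models to data" versus "agents to agents" split shows up in what a request asks for. The sketch below builds one request of each kind. `tools/call` is MCP's method for invoking a tool; the A2A method and field names (`tasks/send`, `message`, `parts`) follow the protocol's initial public draft and may have been renamed in later revisions. The tool name, arguments, and task ID are hypothetical.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request IDs, unique per client

def jsonrpc_request(method, params):
    """Build a JSON-RPC 2.0 request envelope (used by both MCP and A2A)."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}

# Model -> tool or data source (MCP): ask an MCP server to run a tool.
# "get_weather" and its arguments are hypothetical.
mcp_call = jsonrpc_request("tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}})

# Agent -> agent (A2A): hand a task to a remote agent.
# Names follow A2A's initial draft; later spec versions may differ.
a2a_call = jsonrpc_request("tasks/send", {
    "id": "task-42",
    "message": {"role": "user", "parts": [{"type": "text", "text": "Summarize the attached contract."}]},
})

print(json.dumps(mcp_call))
print(json.dumps(a2a_call, indent=2))
```

The MCP request names a specific tool and its arguments, while the A2A request hands over a goal as a message and leaves it to the remote agent to decide how to fulfill it.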

Industry Use Cases

Uber Freight Optimizes Trucking Logistics

Uber Freight is using AI to improve trucking efficiency by reducing empty miles, the distance trucks travel without cargo. Approximately 35% of trucks on U.S. highways operate empty, increasing costs and carbon emissions. Uber Freight’s AI-driven platform matches truckers with continuous loads to minimize these inefficiencies. The system uses machine learning algorithms that consider factors such as traffic, weather, and road conditions to provide upfront pricing and optimal routing. Since its 2017 launch, Uber Freight has managed over $20 billion in freight, serving numerous Fortune 500 companies, and has reduced empty miles by 10–15%.
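To make "empty miles" concrete, here is a toy load-chaining heuristic. It is not Uber Freight's system, which this newsletter does not describe in detail: the straight-line distances, the coordinates, and the greedy "nearest next pickup" rule are all assumptions for illustration. It ignores pricing, traffic, weather, and time windows.

```python
import math

def dist(a, b):
    """Straight-line distance between two (x, y) points; a stand-in for road miles."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def plan_route(start, loads):
    """Greedily chain loads: always take the load whose pickup is closest to the
    truck's current position, keeping each empty (deadhead) leg short.

    loads: list of (pickup, dropoff) points.
    Returns (order_of_load_indices, loaded_miles, empty_miles).
    """
    remaining = list(range(len(loads)))
    position, order = start, []
    loaded = empty = 0.0
    while remaining:
        nxt = min(remaining, key=lambda i: dist(position, loads[i][0]))
        pickup, dropoff = loads[nxt]
        empty += dist(position, pickup)    # driving to the pickup without cargo
        loaded += dist(pickup, dropoff)    # driving with cargo
        position = dropoff
        order.append(nxt)
        remaining.remove(nxt)
    return order, loaded, empty

# Hypothetical loads on a flat map: (pickup, dropoff).
loads = [((0, 0), (100, 0)), ((300, 0), (400, 0)), ((100, 10), (300, 0))]
order, loaded, empty = plan_route((0, 0), loads)
print(order)                                        # [0, 2, 1]
print(f"empty share: {empty / (loaded + empty):.1%}")  # empty share: 2.4%
```

A greedy rule is easy to reason about but can be far from optimal over long horizons; production systems typically solve larger matching or routing optimizations.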

WBD Transforms Sports Broadcasting

Warner Bros. Discovery (WBD) Sports Europe, in collaboration with Amazon Web Services, has launched the Cycling Central Intelligence (CCI) platform, an AI-powered system aimed at improving mountain bike sports coverage. Debuting at the 2025 WHOOP UCI Mountain Bike World Series in Araxá, Brazil, CCI provides instant access to extensive data on riders, venues, and race histories. The platform utilizes technologies like Amazon Bedrock and Anthropic’s Claude 3.5, enabling natural language queries and data synthesis. This innovation enhances commentators’ storytelling capabilities by offering real-time data, thereby enriching the viewer experience.

Adobe Plans Agentic AI Across Creative Suite

Adobe has shared its roadmap for bringing agentic AI to tools like Photoshop, Acrobat, Premiere Pro, and Express. The company’s vision is to support users—not replace them—by building intelligent agents that handle repetitive tasks, offer creative suggestions, and help users learn tools more efficiently. In Acrobat, future agents will act as research or sales assistants that analyze documents and suggest follow-ups. In Express, a creative partner will help users move beyond templates with guided visual storytelling. Photoshop and Premiere Pro are being equipped with AI that can suggest image edits or identify key shots to speed up video editing.

Tokens of Wisdom

Civilization advances by extending the number of important operations that we can perform without thinking of them.

— Alfred North Whitehead, English mathematician and philosopher
