Sound On: Veo 3 Talks, Claude 4 Codes, and OpenAI Builds Hardware

Google gives native voice to video, Claude 4 dominates software tasks, and OpenAI and Mistral rethink developer workflows.

Welcome to The Median, DataCamp’s newsletter for May 23, 2025.

In this edition: Claude 4, Google’s latest AI tools, OpenAI’s hardware push, and more.

This Week in 60 Seconds

Anthropic Releases Claude 4

Anthropic has released Claude 4, available in two versions: Claude Sonnet 4 and Claude Opus 4. Claude Sonnet 4 is positioned as a general-purpose model suited to most AI use cases, with particular strength in coding, and it is available to free users. Claude Opus 4, the flagship model, is designed for reasoning-heavy tasks such as agentic search and complex code workflows, and it posts leading scores on software engineering benchmarks such as SWE-bench Verified. We’ll cover both models in the “Deeper Look” section below.

Key Announcements from Google I/O 2025

Google I/O 2025 heavily emphasized new generative AI capabilities. Highlights include Veo 3, a video generation model that now offers native audio output. Flow, a new AI filmmaking tool, lets users combine Veo, Imagen, and Gemini to generate individual shots with modular control. Imagen 4, Google's latest image generation model, promises advances in photorealism and, notably, improved spelling and typography. Google also introduced Gemma 3n, its most capable on-device model, designed for local, efficient AI processing on phones and laptops. We'll cover the biggest AI announcements in more depth in the "Deeper Look" section.

OpenAI Introduces Codex, a New Software Engineering Agent

OpenAI has launched a research preview of Codex, a cloud-based software engineering agent integrated into ChatGPT for Pro, Team, and Enterprise users. Powered by the specialized codex-1 model, optimized for programming, Codex can handle multiple tasks in parallel, such as writing features, answering codebase questions, fixing bugs, and proposing pull requests. Each task runs in a secure, isolated cloud sandbox environment preloaded with the user's repository, allowing it to autonomously read and edit files, run commands, and execute tests. We’ve put together this Codex tutorial that you can use to get started.

Mistral AI Introduces Devstral for Software Engineering Tasks

Mistral has introduced Devstral, an agentic LLM designed specifically for software engineering tasks. Released under the Apache 2.0 license, Devstral targets real-world software development: it is built to understand code in the context of large codebases, identify relationships between components, and spot subtle bugs. Devstral is light enough to run on a single RTX 4090 or a Mac with 32GB of RAM, making it suitable for local deployment and privacy-sensitive enterprise repositories. The model is available for free download on Hugging Face, Ollama, Kaggle, Unsloth, and LM Studio. Our team has moved quickly and published a Devstral tutorial.
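Since Devstral is distributed through Ollama, one quick way to try it locally is through Ollama's HTTP API. The Python sketch below assumes Ollama is running on its default port (11434) and that the model was pulled under the tag `devstral`; both the tag and the helper names `build_generate_request` and `ask_devstral` are our assumptions for illustration, so check `ollama list` on your own machine.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port and the model tag is "devstral".
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "devstral"  # hypothetical tag; verify with `ollama list`


def build_generate_request(prompt: str, model: str = MODEL_TAG) -> dict:
    """Build the JSON body for Ollama's /api/generate endpoint."""
    # stream=False asks for one JSON object instead of a stream of chunks
    return {"model": model, "prompt": prompt, "stream": False}


def ask_devstral(prompt: str) -> str:
    """POST a prompt to the local Ollama server and return the generated text."""
    body = json.dumps(build_generate_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # In non-streaming mode the generated text is in the "response" field
        return json.loads(resp.read())["response"]
```

With the server running, `ask_devstral("Find the bug in: def last(xs): return xs[len(xs)]")` sends the prompt to the model and returns its answer as a string. Because everything stays on localhost, no code leaves your machine, which is the point of a locally deployable model.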

OpenAI Acquires Jony Ive's 'io' for AI Hardware Development

OpenAI has acquired "io," the AI hardware startup co-founded by former Apple design chief Jony Ive and OpenAI CEO Sam Altman, in a deal valued at approximately $6.4 billion. The move marks OpenAI's major entry into AI-enabled consumer hardware, extending beyond its primary focus on software. Initial reports suggest it is exploring devices such as headphones and screenless AI-native wearables, with the first devices expected to launch in 2026.

Microsoft Build 2025 Emphasizes AI Agents

Microsoft Build is an annual conference for software engineers and web developers using Microsoft technologies. At Build 2025, Microsoft showcased a strong focus on AI agents and developer tools. Key announcements included significant enhancements to Microsoft Copilot Studio, allowing for multi-agent orchestration where AI agents can work together to achieve shared goals across systems and workflows. Microsoft also announced the open-sourcing of GitHub Copilot Chat in VS Code and extended native support for the Model Context Protocol (MCP) across Windows and Azure, aiming for an "open agentic web" where AI agents can connect and collaborate seamlessly.
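MCP messages are JSON-RPC 2.0 requests, and tool discovery and invocation use the `tools/list` and `tools/call` methods. The Python sketch below only builds those messages; it does not open a transport (stdio or HTTP) or perform the protocol's `initialize` handshake. The tool name `get_weather` in the usage note is hypothetical.

```python
from itertools import count
from typing import Optional

# Sketch only: builds JSON-RPC 2.0 messages in the shape MCP uses.
# No transport, session, or initialize handshake is handled here.
_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request with a fresh integer id."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def list_tools() -> dict:
    # Ask an MCP server which tools it exposes
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: dict) -> dict:
    # Invoke a single tool by name, passing its arguments as a JSON object
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})
```

For example, `call_tool("get_weather", {"city": "Seattle"})` yields a request with `"method": "tools/call"` and the tool name and arguments under `params`. Because every MCP server speaks this same message shape, an agent on Windows or Azure can discover and call tools from different providers without custom glue code for each.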


A Deeper Look at This Week’s News

Anthropic Releases Claude 4

This week, Anthropic rolled out its newest generation of large language models, Claude 4, comprising Claude Sonnet 4 and Claude Opus 4. This newsletter gives you an overview, but we covered the subject in more depth in this blog on Claude 4.

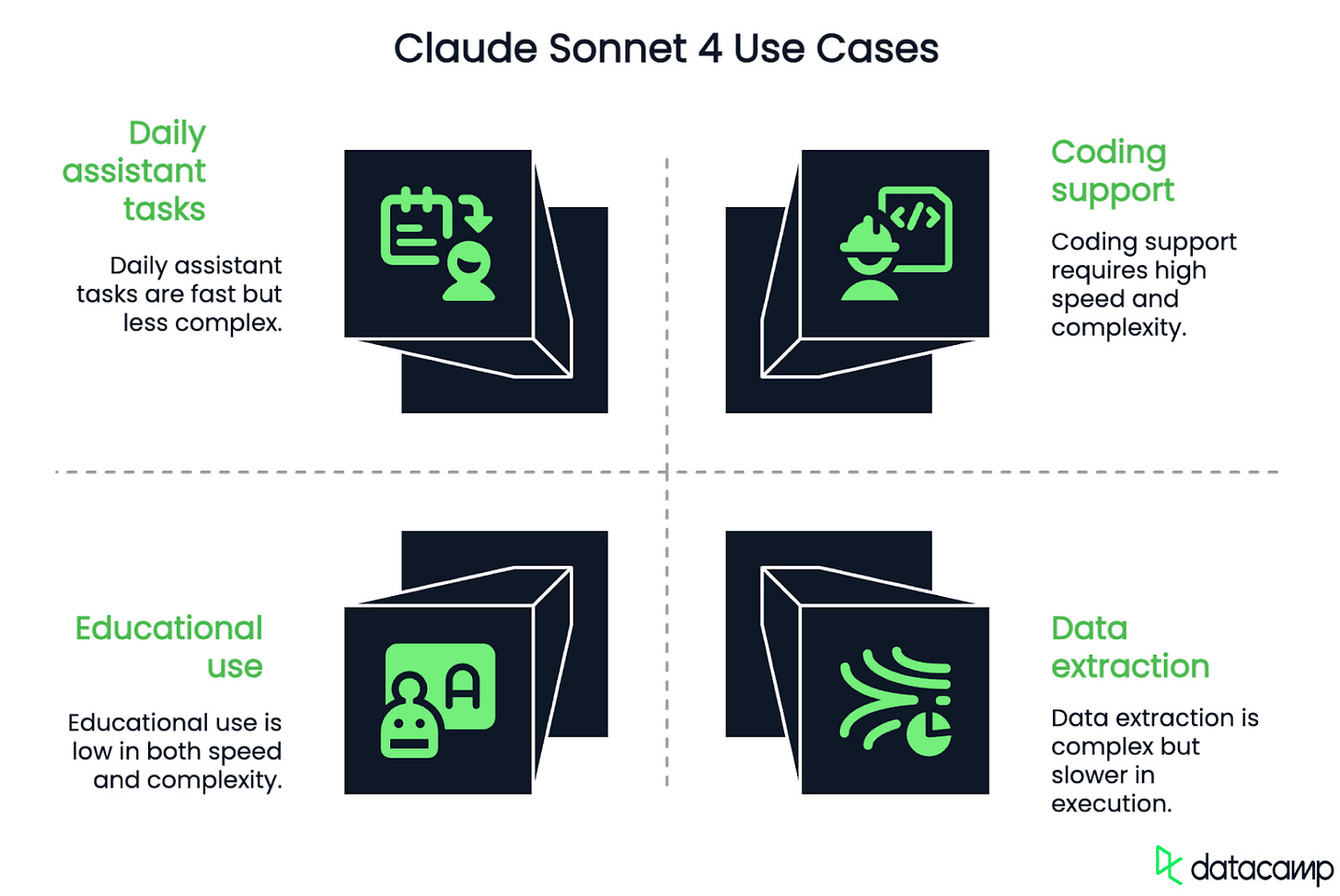

Claude Sonnet 4

Claude Sonnet 4 is positioned as the more general-purpose model within the Claude 4 family. It excels across common AI tasks such as coding, writing, question answering, and data analysis. Notably, Sonnet 4 is available to free users, making it a highly accessible option for a model of its quality.

It supports a 200K-token context window, allowing it to handle relatively large prompts and maintain continuity over long interactions, which is useful for analyzing extensive documents or reviewing codebases.

Compared to its predecessor, Claude 3.7 Sonnet, this version offers improved speed, better instruction following, and greater reliability in code-heavy workflows. It also supports up to 64K output tokens.
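To make the two limits concrete, here is a small Python sketch that computes how much room is left for output given a prompt size. It assumes prompt and output tokens share the same 200K window and treats "200K" and "64K" as 200,000 and 64,000 tokens; the function name `max_output_for` is ours, not part of any Anthropic SDK.

```python
# Figures from the text; assumption: input and output share one context window,
# and "200K" / "64K" mean 200,000 and 64,000 tokens.
CONTEXT_WINDOW = 200_000
MAX_OUTPUT = 64_000


def max_output_for(prompt_tokens: int) -> int:
    """Largest output budget that still fits next to a prompt of this size."""
    if prompt_tokens >= CONTEXT_WINDOW:
        raise ValueError("the prompt alone fills the context window")
    # Output is capped both by the model's output limit and by the space left over
    return min(MAX_OUTPUT, CONTEXT_WINDOW - prompt_tokens)
```

Under these assumptions, a 100,000-token codebase dump still leaves the full 64,000 tokens for the answer, while a 180,000-token prompt leaves only 20,000.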

Anthropic's reported benchmarks show strong performance on real-world coding tasks, with Sonnet 4 even slightly edging out Claude Opus 4 on SWE-bench Verified and significantly outperforming GPT-4.1 and Gemini 2.5 Pro on certain coding tasks.

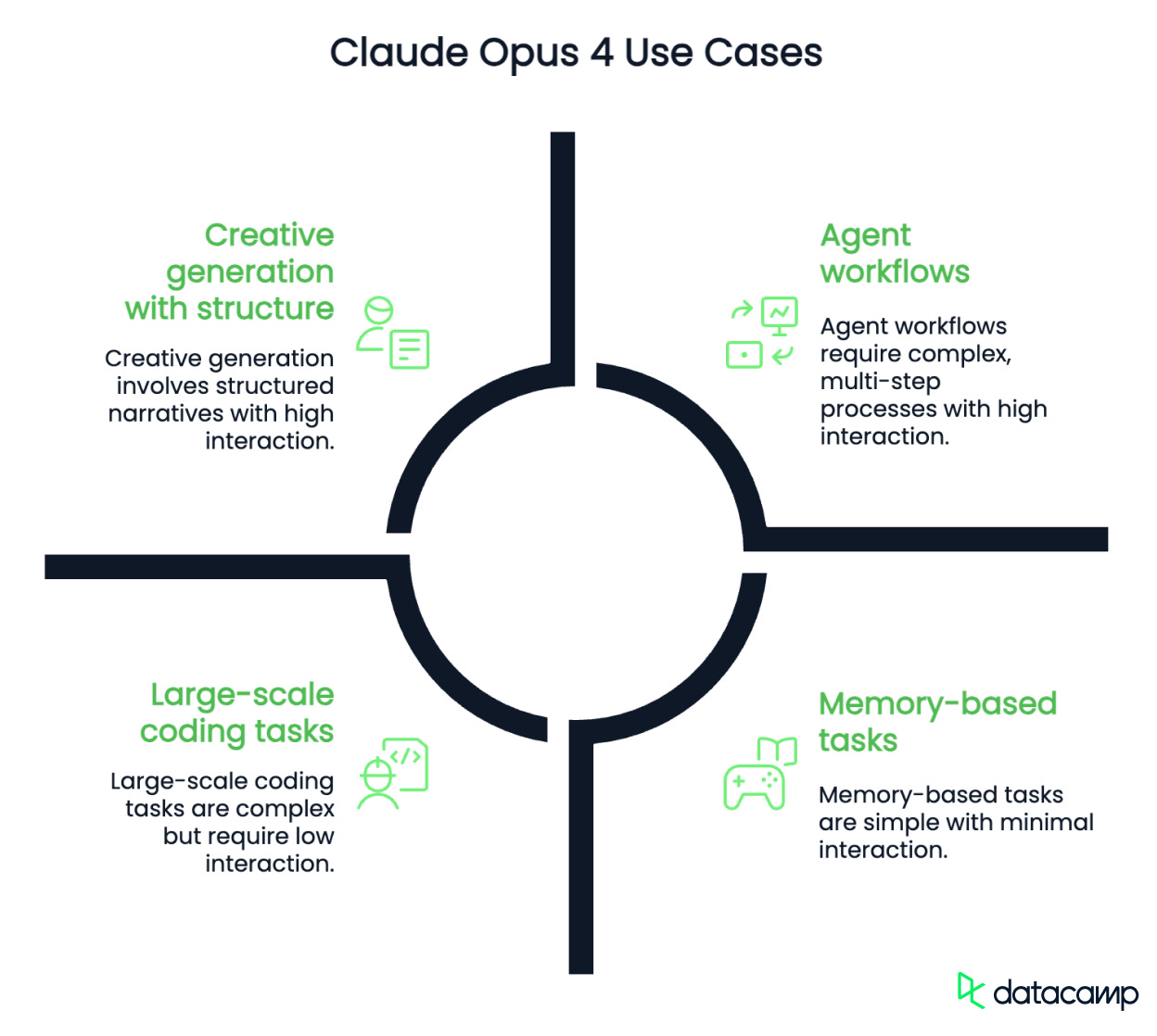

Claude Opus 4

As Anthropic's flagship model, Claude Opus 4 is engineered for tasks demanding deeper reasoning, extended memory, and structured outputs. Its core applications include agentic search, large-scale code refactoring, multi-step problem-solving, and in-depth research workflows.

Like Sonnet 4, Opus 4 has a 200K-token context window. A key capability of Opus 4 is its "extended thinking" mode, which lets it shift from fast responses to more deliberate reasoning, enabling tool use, memory tracking across steps, and summaries of its own thought process.
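For developers, extended thinking is switched on per request. The Python sketch below builds a Messages API request body with a `thinking` block; it assumes the field names from Anthropic's extended-thinking documentation and the launch model ID `claude-opus-4-20250514`, and the helper `build_thinking_request` and its default budgets are our own illustrative choices.

```python
# Assumptions: Messages API field names for extended thinking, and the dated
# Opus 4 model ID at launch. The helper and its defaults are hypothetical.
MODEL_ID = "claude-opus-4-20250514"


def build_thinking_request(question: str, max_tokens: int = 16_000,
                           budget_tokens: int = 8_000) -> dict:
    """Build a request body that enables extended thinking."""
    # The thinking budget must be at least 1,024 tokens and below max_tokens
    if budget_tokens < 1024:
        raise ValueError("budget_tokens must be at least 1024")
    if budget_tokens >= max_tokens:
        raise ValueError("budget_tokens must be less than max_tokens")
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": question}],
    }
```

With the official `anthropic` Python SDK, the resulting dictionary can be passed as keyword arguments, e.g. `client.messages.create(**build_thinking_request("Plan a refactor of this module"))`. A larger `budget_tokens` gives the model more room for deliberate reasoning at the cost of latency and spend.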

Anthropic markets Opus 4 as a high-end model for developers, researchers, and teams building advanced AI agents, with strong performance reported in areas like coding agents and multi-hour tasks. This model is exclusively available on paid plans due to its higher cost and complexity.

Google I/O 2025's AI Highlights

Google I/O 2025 showcased a broad spectrum of advancements across the AI landscape. While the conference featured numerous exciting announcements, we'll focus on three particularly impactful AI innovations in this newsletter. For a more comprehensive overview of all the significant AI announcements from Google I/O 2025, readers can explore our dedicated blog.

Veo 3

Veo 3 stands out as an important advancement in AI-powered video generation, primarily because of its new native audio output. Unlike earlier models and even competitors such as Runway and Sora, Veo 3 generates videos with sound included, eliminating the need for a separate audio editing step.

Access to Veo 3 is currently limited to Google AI Ultra subscribers in the U.S. and is integrated within Google's new AI-powered video editor, Flow.

You can learn more in this blog on Veo 3, which includes a walkthrough of creating a spec ad from scratch for a fictional mint brand called Mintro.

Gemini Diffusion

Among the intriguing experimental technologies introduced at Google I/O 2025 is Gemini Diffusion, a novel model architecture designed to enhance the speed and coherence of text generation.

Unlike traditional autoregressive language models, which generate text one token at a time, Gemini Diffusion borrows a method from image generation: it starts from noise and refines it over multiple iterative steps.

This iterative refinement process allows the model to start with a rough approximation and progressively improve it, making it particularly effective for tasks that benefit from refinement and error correction, such as math, code generation, and text editing.
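Google has not published Gemini Diffusion's internals, so the Python sketch below is a generic toy of masked, diffusion-style text decoding, not Gemini's actual method. The "noise" is a fully masked sequence, and `toy_denoiser` is a hypothetical stand-in for a trained model that simply reads the answer from `target` and attaches a random confidence score. What the sketch does show is the control flow: every step proposes tokens for all masked positions in parallel and commits the most confident ones, so the number of steps is fixed rather than growing with the text length.

```python
import math
import random

MASK = "_"


def toy_denoiser(seq, target, rng):
    """Hypothetical stand-in for a trained model: proposes a token and a
    confidence score for every masked position in one parallel pass."""
    return {i: (target[i], rng.random()) for i, tok in enumerate(seq) if tok == MASK}


def diffusion_generate(target, steps=4, seed=0):
    """Start from an all-masked sequence and unmask it over a fixed number of steps."""
    rng = random.Random(seed)
    seq = [MASK] * len(target)
    history = ["".join(seq)]
    for step in range(steps):
        proposals = toy_denoiser(seq, target, rng)
        # Commit an equal share of the still-masked positions at each step
        k = math.ceil(len(proposals) / (steps - step))
        best = sorted(proposals, key=lambda i: proposals[i][1], reverse=True)[:k]
        for i in best:
            seq[i] = proposals[i][0]
        history.append("".join(seq))
    return history
```

Calling `diffusion_generate("hello world", steps=4)` returns five snapshots, going from 11 masked characters to 8, 5, 2, and finally the complete string, so the text is finished in four parallel passes where a token-by-token decoder would need eleven. Real diffusion samplers can also revisit and revise low-confidence tokens, which is where the error-correction benefit comes from; this toy omits that.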

AI Mode in Search

Google's AI Mode represents a fundamental shift in the search experience, transforming it into a more conversational, chatbot-like interface. Distinct from AI Overviews, which provide summaries atop traditional search results, AI Mode takes over the full interface, allowing users to ask complex questions and engage in follow-up conversations within a dedicated tab. AI Mode is currently rolling out in the U.S., with additional features planned for Google Labs in the coming weeks.

Industry Use Cases

Shopify Debuts AI Store Builder

Shopify has introduced an AI Store Builder, enabling merchants to create online stores by simply entering descriptive keywords. This new offering uses AI to generate complete store layouts, including text and images, reducing the time and resources traditionally required for website design. Vanessa Lee, Shopify's Vice President of Product, highlighted that this tool allows merchants to translate their vision into reality much faster and without needing to code, aligning with Shopify's goal to simplify complex processes for entrepreneurs. The company also redesigned its POS app with AI-powered features for smoother navigation and smarter search, and is exploring agentic AI in e-commerce to streamline browsing, selection, and checkout into single dialogues.

Amazon Introduces AI-Powered Audio Product Summaries

Amazon is improving the shopping experience with a new generative AI-powered audio feature that synthesizes product summaries and reviews. This innovation provides customers with short-form audio highlights of key product features, saving them time by compiling research from product pages, customer reviews, and information across the web. These AI-powered shopping experts are currently being tested on select products for a subset of U.S. customers, with plans for broader rollout. The feature utilizes large language models to generate scripts and audio clips, building on Amazon's existing AI-powered shopping tools like Rufus (a generative AI shopping assistant), Shopping Guides, Interests, and Review highlights.

AI Humanoid Robots Transform Car Dealerships

The automotive industry is embracing AI humanoid robots to improve car dealerships, with Chinese manufacturer Chery leading the charge by showcasing its advanced sales assistant, Mornine, at the 2025 Shanghai Auto Show. Chery plans to roll out 220 Mornine units globally, aiming to shift towards robotic retail experiences. Mornine can explain car features, guide showroom tours, serve refreshments, and communicate in multiple languages, responding to voice and gesture commands with conversational abilities driven by advanced large language models. Chery envisions wider applications for these AI humanoids in various sectors, positioning them as daily companions and a vital part of the future customer journey in automotive retail.

Tokens of Wisdom

For AI to really take off, the focus has to be on how to deliver a business outcome to people, not what tools to build.

—William Falcon, CEO at Lightning AI

Learn more about the journey from an early AI idea to production by listening to this podcast with William Falcon, CEO at Lightning AI.

Salam