Your Brain on ChatGPT: Cognitive Debt, EU Report Insights & More

Relying too much on AI may weaken connectivity in your brain, but doesn’t AI also make you more productive?

Welcome to The Median, DataCamp’s newsletter for June 20, 2025.

In this edition: Google strengthens its Gemini 2.5 suite, Midjourney releases its first video model, a new paper warns that overreliance on ChatGPT may create cognitive debt, and more.

This Week in 60 Seconds

Google Releases Gemini 2.5 Flash-Lite, Stabilizes Pro and Flash

Google has launched Gemini 2.5 Flash-Lite in preview. It is the fastest and most affordable 2.5 model to date and is now available in Google AI Studio and Vertex AI. At the same time, Gemini 2.5 Pro and Flash have exited preview and are now generally available. Our suggestion: use 2.5 Flash for everyday tasks, 2.5 Pro for coding and more complex reasoning, and 2.5 Flash-Lite for high-volume, cost-sensitive tasks that don’t require deep thinking. You can find a few examples of Gemini 2.5 Pro’s performance in this blog.

Midjourney Releases Its First Video Model

Midjourney has launched the first version of its video model. The new model focuses on ease of use and affordability, with the goal of enabling creative video generation at scale. It supports moving images built from prompts and lays the foundation for future work in 3D space and real-time simulation. The company says this release is part of a broader roadmap toward open-world, interactive environments. The timing is notable: just last week, we covered Disney and Universal’s lawsuit against Midjourney over copyright violations tied to image generation. If you missed it, you can catch up in our previous issue.

Research Suggests That Using AI Creates Cognitive Debt

Researchers at MIT Media Lab published a study exploring how using ChatGPT affects memory, reasoning, and ownership of ideas. In an experiment with 54 participants, those writing with AI showed weaker brain activity in areas linked to memory and executive function. The authors suggest that relying too much on LLMs could lead to “cognitive debt”. The paper has sparked widespread debate and confusion this week, amplified by misleading headlines and engagement-hungry takes from both influencers and mainstream media. We’ll take a balanced approach to this issue in the Deeper Look section.

Rebuttal Counters Apple’s Study on Reasoning Models

A new paper titled The Illusion of the Illusion of Thinking pushes back on Apple’s Illusion of Thinking study that we covered two weeks ago. The rebuttal is credited to Alex Lawsen (Open Philanthropy) and Anthropic’s Claude Opus, a rare case of a large language model being listed as a paper co-author. It argues that the original findings were driven by experimental flaws, not reasoning failure. The paper shows that many supposed failures, such as those in the Tower of Hanoi task, were due to token limits rather than conceptual gaps, and that some river crossing problems were mathematically unsolvable to begin with. The authors also demonstrate that when models are prompted to generate code instead of exhaustive move lists, their reasoning holds up well.
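To see why token limits matter here, consider how fast the Tower of Hanoi move list grows: the optimal solution for n disks takes 2^n - 1 moves, while the program that produces those moves stays a few lines long. The sketch below is our own illustration, not code from either paper. The function name `hanoi_moves` and the `TOKENS_PER_MOVE` figure are assumptions chosen only to make the arithmetic concrete.

```python
def hanoi_moves(n, source="A", target="C", spare="B"):
    """Yield the optimal Tower of Hanoi solution as (disk, from_peg, to_peg) tuples."""
    if n == 0:
        return
    # Move the n-1 smaller disks out of the way, onto the spare peg.
    yield from hanoi_moves(n - 1, source, spare, target)
    # Move the largest disk to its destination.
    yield (n, source, target)
    # Move the n-1 smaller disks from the spare peg on top of it.
    yield from hanoi_moves(n - 1, spare, target, source)


# Assumption for illustration only: a rough token cost for writing out one move.
# This is not a figure taken from the Apple study or the rebuttal.
TOKENS_PER_MOVE = 10

if __name__ == "__main__":
    for n in (3, 10, 15, 20):
        move_count = 2**n - 1
        approx_tokens = move_count * TOKENS_PER_MOVE
        print(f"{n} disks: {move_count:,} moves, roughly {approx_tokens:,} tokens to list them all")
```

Under that assumed cost, a 15-disk puzzle already needs 32,767 moves, so writing out the full list can exceed an output budget long before the model runs out of "reasoning". The generator itself stays the same size however many disks there are.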

EU Releases 2025 Generative AI Outlook Report

The European Commission’s Joint Research Centre released its 2025 Generative AI Outlook Report, a wide-ranging analysis of how GenAI is reshaping sectors from education to healthcare while raising policy concerns around disinformation, labour disruption, and cognitive offloading. The EU now ranks second globally in GenAI research output but lags behind in innovation and infrastructure investment. The report calls for coordinated action to support open-source models, expand AI literacy, and align deployment with EU values. We’ll explore the report in this week’s Deeper Look section.

A Deeper Look at This Week’s News

ChatGPT Causes Cognitive Debt: A Balanced Take

A new study from MIT Media Lab researchers suggests that relying heavily on large language models could lead to "cognitive debt," potentially diminishing memory and deeper understanding. While the findings have been amplified by sensational headlines across media, a closer look reveals a more nuanced picture.

The study at a glance

The researchers recruited 54 participants, dividing them into three groups: one using an LLM (GPT-4o), another using a traditional search engine, and a "Brain-only" group with no external tools.

Participants wrote essays over three sessions, with a fourth session involving a switch in tools for some groups (e.g., LLM users moving to "Brain-only").

Brain activity was monitored using EEG, and essays were analyzed for linguistic patterns and scored by both human teachers and an AI judge.

Reduced neural connectivity with LLM use

The study found that the "Brain-only" group consistently exhibited stronger and more widespread neural connectivity across all measured frequency bands (alpha, theta, delta) compared to the LLM group.

Source: Your Brain on ChatGPT

This suggests that writing without AI assistance engaged more internal cognitive resources for tasks like ideation, planning, and semantic retrieval. The LLM group, in contrast, showed weaker overall neural coupling, implying that the AI offloaded some of the cognitive burden.

Our balanced take: While LLMs may reduce the brain's "workout" for certain cognitive processes, this could also free up mental resources for other tasks. The paper itself notes this potential trade-off between immediate convenience and deeper cognitive engagement.

Impaired memory and ownership

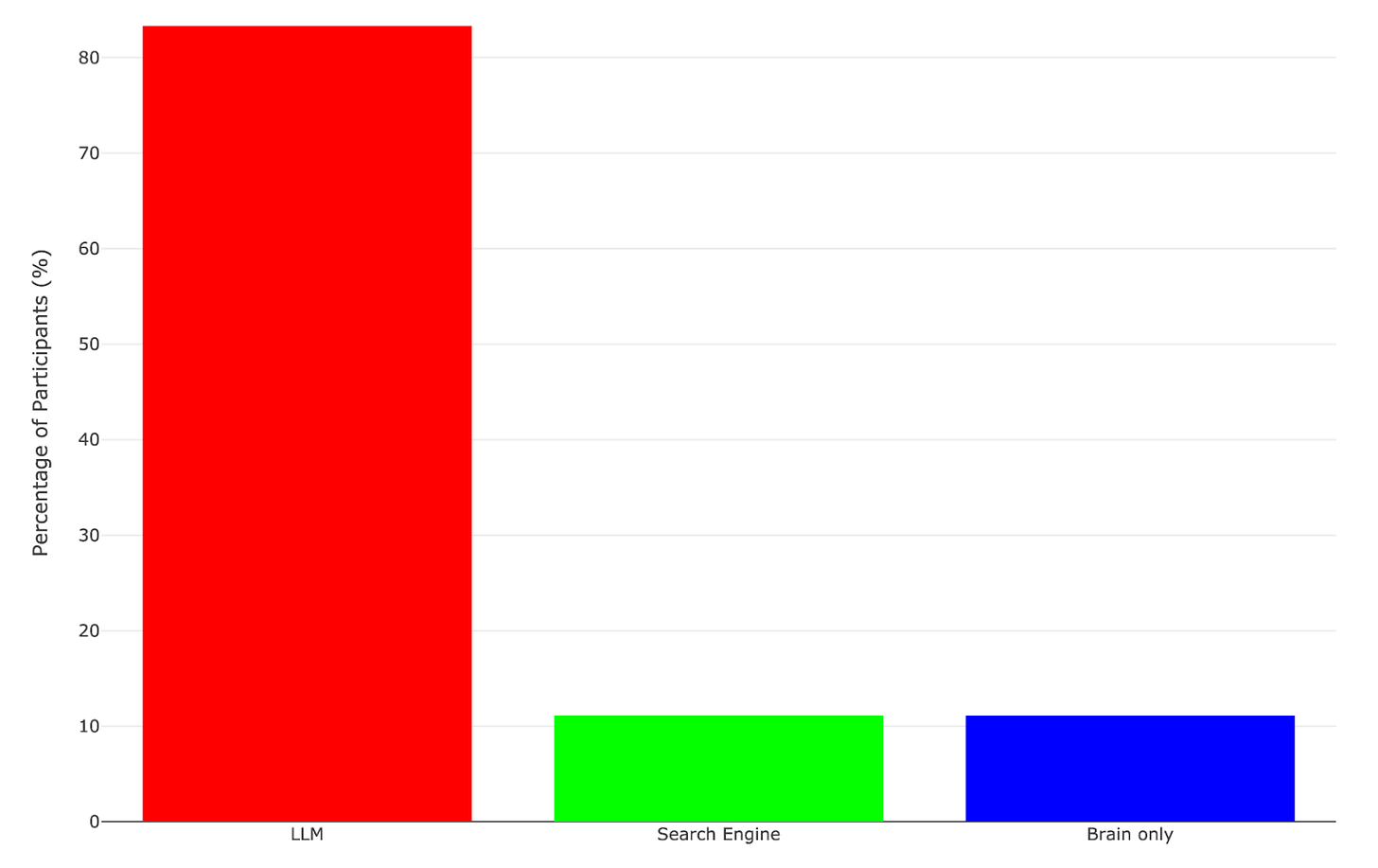

Participants in the LLM group had trouble recalling what they had written: 83.3% struggled to quote anything from their own essays.

Percentage of participants who struggled to quote anything from their essays. Source: Your Brain on ChatGPT

They also reported a fragmented sense of ownership over their essays, with some claiming full ownership, others none, and many assigning partial credit.

Our balanced take: The memory and ownership issues highlight an important concern: if AI tools handle too much of the content generation, users may not internalize the material as effectively. That doesn’t mean we should stop using LLMs, but it does suggest a need for strategies that promote active engagement with AI-generated content, rather than passive acceptance.

Cognitive adaptation and "cognitive debt"

When LLM users were switched to the "Brain-only" condition in Session 4, their brain connectivity didn't immediately reset to a novice level, nor did it reach the peak levels of the consistently "Brain-only" group.

Instead, it showed an intermediate state, suggesting some "cognitive offloading" occurred previously, leading to less coordinated neural effort when the AI support was removed.

The paper defines "cognitive debt" as the long-term cost of deferring mental effort to external systems, potentially diminishing critical thinking and creativity.

Our balanced take: This “cognitive debt” isn’t necessarily a permanent deficit—it’s more of an adaptation. The brain is efficient and allocates resources where they’re most needed. If AI handles certain tasks, the brain may naturally focus less on those areas. Active learning and practice are key to strengthening neural networks, so a balanced approach—using AI for certain tasks while continuing to challenge ourselves with core cognitive work—could be the most beneficial.

The calculator analogy

This concept of "cognitive debt" isn't entirely new. Consider the calculator: while it allows us to perform complex calculations with speed and accuracy, its widespread use has also sparked debates about its impact on fundamental arithmetic skills.

Has the calculator "set us back" by reducing our reliance on mental math? In a way, perhaps for some basic calculations, but it has simultaneously freed up cognitive resources for higher-level problem-solving and understanding more complex mathematical concepts.

The core idea is that we offload the routine, low-level cognitive work to the tool, allowing our brains to focus on more intricate or novel challenges.

The EU's Generative AI Outlook: Ambition Meets Reality

The European Commission's Joint Research Centre (JRC) released its comprehensive 2025 Generative AI (GenAI) Outlook Report. The report shows how this transformative technology is reshaping industries, society, and policy across the European Union. However, it also raises concerns about disinformation, labor market disruption, and cognitive offloading.

The EU's position: research strong, innovation lagging

The report paints a nuanced picture of the EU's standing in the global GenAI landscape. While the EU demonstrates a strong research environment, ranking second globally in academic publications related to GenAI (China is the leader), it faces considerable challenges in translating this research into innovation and commercial success.

EU patent filings on GenAI, for instance, comprise only 2% of global filings, trailing behind China (80%), South Korea (7%), and the US (7%).

Source: Generative AI (GenAI) Outlook Report

Disinformation and information manipulation

The report emphasizes GenAI's capacity to produce highly convincing manipulative content rapidly and at scale, posing a significant threat to public discourse and democratic processes.

This includes the widespread generation of deepfakes and misleading narratives. The JRC highlights that the speed of AI-generated misinformation often outpaces the ability to effectively counter it.

Proposed action: To combat this, the report stresses the importance of promoting media and AI literacy among citizens and policymakers, alongside technical solutions like watermarking AI-generated content.

Labor market dynamics and skills gap

GenAI is expected to drive substantial productivity gains and foster job creation, but it also calls for careful consideration of potential job displacement and occupational restructuring.

The report notes a shift in demand towards skills needed to navigate and engage with AI, such as critical thinking and emotional intelligence, potentially leading to a divide between high-skill and low-skill jobs.

Proposed action: Policymakers must focus on upskilling and reskilling the workforce to address these changing needs, fostering "workforce resilience and adaptability."

Cognitive offloading and critical thinking

A striking concern the report raises is the risk of over-reliance on AI for task completion, which "could undermine critical thinking, problem solving, and the role of educators."

The report explicitly warns that while GenAI can augment human creativity and productivity, it "can also dampen critical thinking, nuanced understanding, and the development of human skills while undermining our capacities to act in case such systems are not available".

This concern echoes the "cognitive debt" discussed earlier in this "Deeper Look" section.

Proposed action: The EU advocates for "judicious AI adoption, especially in sensitive domains like education and healthcare." It calls for "balanced approaches" that encourage active engagement rather than passive consumption of AI-generated content.

Industry Use Cases

Google DeepMind’s Veo Contributes to Creating Short Film

Google DeepMind partnered with director Eliza McNitt and Darren Aronofsky’s Primordial Soup to create ANCESTRA, a short film combining live-action footage with generative video made using Veo and Imagen. You can watch the film here and the making-of here. The team used Gemini to craft prompts based on personal photos, then generated concept art and animation to match live scenes with precise camera motion and tone. Veo’s image-to-video and “add object” tools were used to realistically render elements like a newborn baby and scenes inside the human body.

AI Used to Improve Tropical Cyclone Forecasts

Google DeepMind and Google Research have launched Weather Lab, a platform for sharing AI-generated forecasts, including a new experimental model for tropical cyclones. Developed with the U.S. National Hurricane Center and Colorado State University’s CIRA, the model predicts a cyclone’s path, intensity, and size up to 15 days in advance. It combines historical reanalysis data with cyclone-specific records, offering emergency services and meteorological agencies a more accurate and longer-range picture for high-stakes decision-making.

ANZ Uses Fine-Tuned Llama Models to Automate Tasks

ANZ, one of Australia’s largest banks, is using Meta’s Llama models to improve its internal Ensayo AI platform, which supports software delivery and technical documentation. By fine-tuning Llama on datasets like API specs and incident histories, the bank has automated parts of its test generation and system mapping workflows. While the models don’t touch production or customer data, they’ve proven effective at handling low-context engineering queries. ANZ highlights open-source flexibility and internal oversight as key to making this deployment work within strict regulatory frameworks.

Tokens of Wisdom

We must guarantee that AI supports rather than harms young people, for example by avoiding over-reliance on AI-generated content in education, which could undermine critical thinking and lead to cognitive erosion.

—Ekaterina Zaharieva, European commissioner for startups, research, and innovation
