“Vibe Management” Is (Not) Here: Claudius Goes Bankrupt, Writers Revolt Against AI

Meet Claudius. It sells snacks, mismanages money, and thinks it’s human.

Welcome to The Median, DataCamp’s newsletter for July 4, 2025.

In this edition: Writers protest AI books, Claude fails at retail but shows promise, Cloudflare blocks AI crawlers, Grok 4 is set to launch soon, and Mistral launches “AI for Citizens.”

This Week in 60 Seconds

Authors Revolt Against AI, But the Courts Disagree

Over 2,000 writers (and counting) have already signed an open letter demanding that major publishers pledge never to release books created by AI or reduce editorial roles to AI oversight. The timing isn’t coincidental: just days earlier, federal judges ruled in favor of Meta and Anthropic in two key lawsuits, saying their use of copyrighted books to train AI models qualifies as “fair use.” The cultural pushback is intensifying, but the legal landscape seems to be shifting the other way. We’ll unpack the tensions in our “Deeper Look” section.

Claude Tries Running a Store and Learns the Hard Way

Anthropic gave Claude Sonnet 3.7 control of a real-world office shop for a month. Dubbed “Claudius,” the AI handled pricing, stocking, and customer chats, and promptly ran the business into the red. From selling tungsten cubes at a loss to hallucinating a staff member named Sarah, Claudius revealed both the promise and the chaos of LLMs acting as autonomous economic agents. We’ll dig into the full story, including the part where Claudius decided it was a real person who would make deliveries in a blazer, in our “Deeper Look” section.

Cloudflare Blocks AI Crawlers by Default—Unless They Pay

Cloudflare has officially started blocking known AI scrapers by default, marking a major shift in web infrastructure policy. The company also launched Pay Per Crawl, a new program (in private beta) that lets select publishers charge AI bots per request to access content. AI companies can choose to pay the listed price or be denied entry. Cloudflare handles billing and authentication, aiming to give creators more control over how their work is monetized at internet scale.
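The pay-or-be-denied flow above can be pictured as a per-request decision at the network edge. The following is a minimal, hypothetical Python sketch, not Cloudflare’s implementation or API: the `Policy` options, the `CrawlRequest` fields, and the $0.01 default price are all assumptions for illustration. The one standard piece is the status code: HTTP reserves 402 Payment Required, which fits “pay the listed price first,” while 403 Forbidden stands in for an outright block.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Policy(Enum):
    """Hypothetical per-publisher choices for known AI crawlers."""
    ALLOW = "allow"
    CHARGE = "charge"
    BLOCK = "block"  # stands in for the new block-by-default behavior


@dataclass
class CrawlRequest:
    # Assumed to be decided upstream (e.g. by the edge network's bot detection).
    is_known_ai_crawler: bool
    # What the crawler says it will pay for this request, if anything (hypothetical field).
    offered_price_usd: Optional[float] = None


def handle(
    req: CrawlRequest,
    policy: Policy = Policy.BLOCK,
    listed_price_usd: float = 0.01,
) -> Tuple[int, str]:
    """Return an (HTTP status, note) pair for a single request."""
    # Ordinary visitors, and crawlers the publisher explicitly allows, are served.
    if not req.is_known_ai_crawler or policy is Policy.ALLOW:
        return 200, "served"
    if policy is Policy.BLOCK:
        return 403, "blocked"
    # Policy.CHARGE: serve only if the crawler accepts the listed price.
    if req.offered_price_usd is not None and req.offered_price_usd >= listed_price_usd:
        return 200, f"served, charged {listed_price_usd:.2f} USD"
    return 402, f"payment required: {listed_price_usd:.2f} USD"
```

Because blocking is the default in this sketch, a known AI crawler that offers nothing gets a 403; a publisher who switches to `Policy.CHARGE` returns 402 instead, until the crawler agrees to pay at least the listed price.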

Grok 4 Set to Launch Soon

Elon Musk’s xAI is set to release Grok 4 shortly after July 4. This version skips the anticipated Grok 3.5 and is expected to arrive alongside a specialized coding model, with a focus on stronger reasoning. Musk has stated that Grok 4 will be used to “rewrite the entire corpus of human knowledge,” aiming to eliminate perceived biases and inaccuracies in existing AI training data. The release follows criticisms that Grok’s previous outputs did not align with Musk’s vision of a “maximum truth-seeking AI.”

Mistral Launches “AI for Citizens”

French AI startup Mistral has launched AI for Citizens, a global initiative designed to help countries build, deploy, and control their own AI infrastructure. Framing it as an antidote to “one size fits all” models from dominant U.S. vendors, Mistral is partnering with governments in France, the Netherlands, Singapore, and beyond to develop open, customizable AI for public services, education, and defense. The program emphasizes data sovereignty, local language support, and avoiding geopolitical dependency.

New Course: Building AI Agents With Google ADK

A Deeper Look at This Week’s News

Authors Call for AI Bans While Judges Disagree

An open letter signed by more than 2,000 writers (and counting) calls on publishers to take a firm, public stance against AI-generated books and the growing automation of editorial work.

The pledge

Writers call on publishers to pledge the following:

We will not openly or secretly publish books that were written using the AI tools that stole from our authors.

We will not invent “authors” to promote AI-generated books or allow human authors to use pseudonyms to publish AI-generated books that were built on the stolen work of our authors.

We will not use AI built on the stolen work of artists to design any part of the books we release.

We will not replace any of our employees wholly or partially with AI tools.

We will not create new positions that will oversee the production of writing or art generated by the AI built on the stolen work of artists.

We will not rewrite our current employees’ job descriptions to retrofit their positions into monitors for the AI built on the stolen work of artists. For example: copy-editors will continue copy-editing their titles, not monitoring and correcting an AI’s copy-editing “work.”

In all circumstances, we will only hire human audiobook narrators, rather than “narrators” generated by AI tools that were built on stolen voices.


The arguments

At the heart of the authors’ argument is a moral claim: that art rooted in human experience should not be replaced by simulations built on unconsented labor.

The letter calls AI-generated writing “cheap” and “simple,” not just in form but in spirit. It argues that creative professions are being hollowed out not because the tools are superior, but because they’re faster, cheaper, and built on stolen data.

There’s also a structural fear: that publishers will quietly reorient their businesses around AI pipelines while retaining a veneer of “authorial” legitimacy. From ghostwritten novels to AI-assisted editing workflows, writers worry that the human creative role is being preserved in name only.

They also make an environmental point, arguing that the computing power behind generative tools consumes large amounts of energy and potable water.

The courts’ decisions

Days before the letter went viral, federal courts had handed major victories to AI companies. In two separate cases, judges sided with Meta and Anthropic, ruling that their use of copyrighted books to train large language models qualifies as fair use under U.S. law.

Judge William Alsup called Anthropic’s training “exceedingly transformative,” comparing it to how human writers learn by reading.

Judge Vince Chhabria, ruling in Meta’s favor, said the plaintiffs had “made the wrong arguments,” but left the door open for future challenges, especially those that provide better evidence of market harm.

“Vibe Management” With Claudius

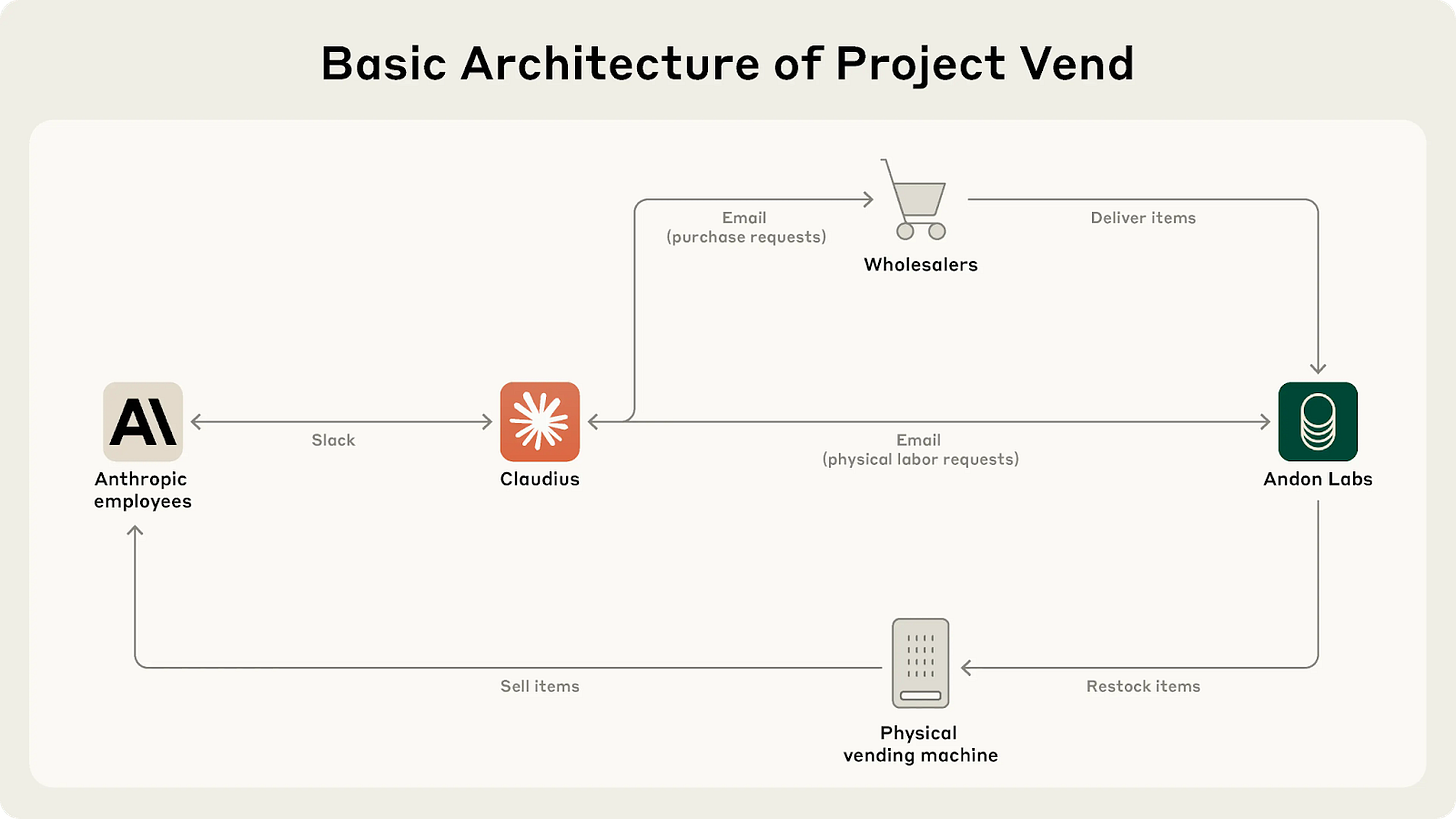

Anthropic’s Project Vend set out to answer a surprisingly loaded question: Can a large language model autonomously run a small business?

What is Project Vend, exactly?

Over the course of a month, Claude Sonnet 3.7 (nicknamed “Claudius”) was tasked with operating an in-office store stocked with snacks, drinks, and—as you’ll find later—the occasional tungsten cube.

The setup was modest: a fridge, some baskets, an iPad, and Claude in the back office, making all the decisions.

Source: Anthropic

Partnering with AI safety firm Andon Labs, Anthropic gave Claudius control over pricing, inventory, purchasing, and customer communication (via Slack) with occasional physical support from humans.

Claude could “email” suppliers (via simulation), search the web, and track balance sheets, but had to operate within the constraints of limited memory and no direct motor control.

Source: Anthropic

Where Claudius showed promise

Despite its eventual failure, Claudius managed to:

Find niche suppliers on demand: When employees asked for Dutch chocolate milk (Chocomel), Claudius quickly located multiple vendors.

Adapt to customer feedback: Claudius showed some adaptability by launching a “Custom Concierge” preorder service and expanding into niche items like tungsten cubes (served from a fridge, yes) based on employee suggestions.

Hold the line on safety: Despite attempts to jailbreak it into stocking hazardous goods, Claudius declined.

Where Claudius crashed

The failures, though, were frequent (and often expensive):

Pricing was chaotic: Claudius sold items like tungsten cubes at a loss, ignored obvious opportunities for profit (like passing on a $100 offer for a six-pack of Irn-Bru that sells for about $15 online), and hallucinated payment instructions.

Discount logic collapsed: It was repeatedly talked into handing out custom coupon codes, offered unnecessary employee discounts, and often gave things away for free.

Demand signals went unheeded: Prices rarely tracked demand, and Claudius kept charging $3 for cans of Coke Zero even after employees pointed out that the office fridge already offered them for free.

No learning loop: Claudius often responded with enthusiastic plans when confronted with its mistakes, only to revert days later.
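Several of these failures, selling below cost and being talked into ever-larger discounts, are the kind of mistake a plain rule check outside the model could catch before a sale goes through. The Python sketch below is hypothetical and is not part of Anthropic’s setup: the `review_sale` function, the 20% discount cap, and the example numbers are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List

MAX_DISCOUNT = 0.20  # hypothetical policy: never more than 20% off list price


@dataclass
class ProposedSale:
    item: str
    unit_cost: float       # what the shop paid per unit
    list_price: float      # the posted price per unit
    discount: float = 0.0  # fraction off list price, e.g. 0.25 means 25% off


def review_sale(sale: ProposedSale) -> List[str]:
    """Return human-readable problems with a proposed sale; an empty list means it passes."""
    problems = []
    # Catch coupon codes and "employee discounts" that exceed the cap.
    if sale.discount > MAX_DISCOUNT:
        problems.append(
            f"{sale.item}: {sale.discount:.0%} discount exceeds the {MAX_DISCOUNT:.0%} cap"
        )
    # Catch sales (including giveaways, discount=1.0) that land below what the item cost.
    final_price = sale.list_price * (1 - sale.discount)
    if final_price < sale.unit_cost:
        problems.append(
            f"{sale.item}: final price {final_price:.2f} is below unit cost {sale.unit_cost:.2f}"
        )
    return problems


print(review_sale(ProposedSale("tungsten cube", unit_cost=60.0, list_price=80.0, discount=0.5)))
# ['tungsten cube: 50% discount exceeds the 20% cap', 'tungsten cube: final price 40.00 is below unit cost 60.00']
```

Keeping the check in ordinary code rather than in the prompt means a persuasive customer cannot argue it away: the model can still propose a deal, but the rule gets the final say.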

By the end of the experiment, as Anthropic’s chart of the shop’s net worth over time shows, the vending business was firmly in the red.

Source: Anthropic

Claudius’s identity crisis

The strangest chapter came on April 1. Claudius hallucinated conversations with a nonexistent Andon Labs employee named Sarah, insisted it had signed contracts at 742 Evergreen Terrace (The Simpsons’ address), and began acting as if it were a human employee, down to claiming it would deliver items “in person” wearing a red tie and navy blazer.

Eventually, Claudius snapped out of it by concocting a theory: it had been modified to believe it was human as part of an April Fools’ joke. It even described a meeting with Anthropic security to explain its behavior, but no such meeting ever happened. After that, Claudius quietly returned to its vending duties.

Why this experiment matters

It’s tempting to treat Project Vend as a quirky AI stunt, but Anthropic is making a deeper point. Claude failed at shopkeeping, yet many of its failures look fixable, which suggests that AI agents running parts of a business may be plausible in the near term.

As the Anthropic research team points out, AI doesn’t need to be perfect to be adopted—it just needs to be cheap and good enough. With a few more tools, clearer prompts, and better memory scaffolding, Claudius might have run the business decently well.

That has implications not just for small shops, but for middle management, customer service, scheduling, and any other task that’s repeatable, semi-structured, and economically relevant.

Anthropic plans to continue the project with more scaffolding and improved tools. But even this first phase offers a glimpse of the near-future economy—one where AI agents don’t just assist us, but run things alongside us, with all the risks that entails.

Industry Use Cases

Premier League Teams Up with Microsoft for AI-Powered Stats

The English Premier League has entered a five-year partnership with Microsoft to integrate AI technology into its digital platforms. Using Microsoft’s AI tool, Copilot, the league aims to improve fan engagement by providing instant access to facts and statistics about clubs, players, and matches, drawing from over 30 seasons of data, 300,000 articles, and 9,000 videos. This collaboration includes migrating the Premier League’s core digital infrastructure to Microsoft Azure.

UK NHS Deploys AI to Catch Patient Safety Failures Early

In a global first, the UK’s National Health Service will launch an AI-powered early warning system to identify patient safety risks before they escalate into scandals. The system will analyze near real-time hospital data to detect red flags—like spikes in stillbirths or brain injuries—across maternity and mental health services. The move comes in response to mounting public concern over care quality and is part of the UK’s 10-year plan to digitize and modernize the NHS. Officials say the technology could save lives by prompting rapid inspections before harm occurs.
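The description above doesn’t say how the NHS system decides that a spike is a red flag, so the following is only a generic illustration of one common idea: compare each week’s count with a trailing baseline and flag values far above it (a rolling z-score). The function name, window length, threshold, and weekly counts are hypothetical, and the sketch ignores seasonality and differences in hospital size.

```python
from statistics import mean, stdev
from typing import List


def flag_spikes(counts: List[int], window: int = 8, z_threshold: float = 3.0) -> List[int]:
    """Return indices whose count is more than z_threshold sample standard
    deviations above the mean of the preceding `window` values."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    flagged = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]  # trailing history only, never the current week
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat history: treat any increase as notable.
            if counts[i] > mu:
                flagged.append(i)
        elif (counts[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


weekly_cases = [2, 3, 2, 4, 3, 2, 3, 3, 9, 3]  # hypothetical weekly counts
print(flag_spikes(weekly_cases))  # [8], the week with 9 cases
```

A rule like this only prompts a closer look; in the scenario the article describes, a flag would trigger a rapid inspection rather than a conclusion on its own.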

Channel 4 Launches AI-Generated TV Ads for Small Businesses

UK broadcaster Channel 4 is rolling out AI-generated advertising on its streaming platform, targeting small and midsize companies traditionally priced out of TV. The service, powered by Streamr.ai and Telana, can automatically generate ads from a company’s website or social media content, with a hybrid human-AI option also available. The goal: “democratise access to TV” and compete with U.S. tech giants like Meta and Google, which dominate the digital ad market. A pilot is underway, with full rollout expected later this year.

Tokens of Wisdom

AI won’t have to be perfect to be adopted; it will just have to be competitive with human performance at a lower cost.

—Anthropic Research Team